Executive Summary

Modern .NET engineers are moving beyond CRUD APIs and MVC patterns into AI-Driven Development and LLM Integration in .NET.

Mastery of LLM Integration in .NET, Semantic Kernel, ML.NET and Azure AI has become essential for senior and architect-level roles, especially where enterprise systems require intelligence, automation, and multimodal data processing.

This guide synthesizes industry best practices, Microsoft patterns, and real-world architectures to help senior builders design scalable systems that combine generative AI + traditional ML for high-impact, production-grade applications.

Teams that adopt these practices gain a decisive advantage in enterprise automation and intelligent workflow design.

Understanding LLMs in 2026

Large Language Models (LLMs) are built on the transformer architecture and rely on:

- Self-attention for token relevance

- Embedding layers to convert tokens to vectors

- Autoregressive generation where each predicted token becomes the next input

- Massively parallel GPU compute during training
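For reference, here is the standard formulation behind the first and third bullets. Q, K, and V are the query, key, and value matrices projected from the token embeddings, d_k is the key dimension, and x_t is the token at position t. Greedy decoding (the argmax) is only one decoding strategy. Production systems often sample instead.

```latex
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V

p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_{<t}), \qquad
\hat{x}_t = \arg\max_{x} \; p(x \mid x_{<t})
```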

Unlike earlier RNN/LSTM networks, LLMs:

✔ Process entire input sequences in parallel rather than one token at a time (during training and prompt processing)

✔ Learn contextual relationships across long spans of text

✔ Scale to billions of parameters

✔ Generate human-friendly, structured responses

Today’s enterprise systems combine LLMs with:

- NLP (summaries, translation, classification)

- Agentic workflows and reasoning

- Multimodal vision & speech models

- Domain-aware RAG pipelines

These capabilities form the backbone of LLM integration in .NET, enabling systems that reason over enterprise data and interact in natural language.

Architectural Patterns for LLM Integration in .NET

.NET has matured into a first-class platform for enterprise AI, and a set of repeatable design patterns has emerged for building intelligent systems on it.

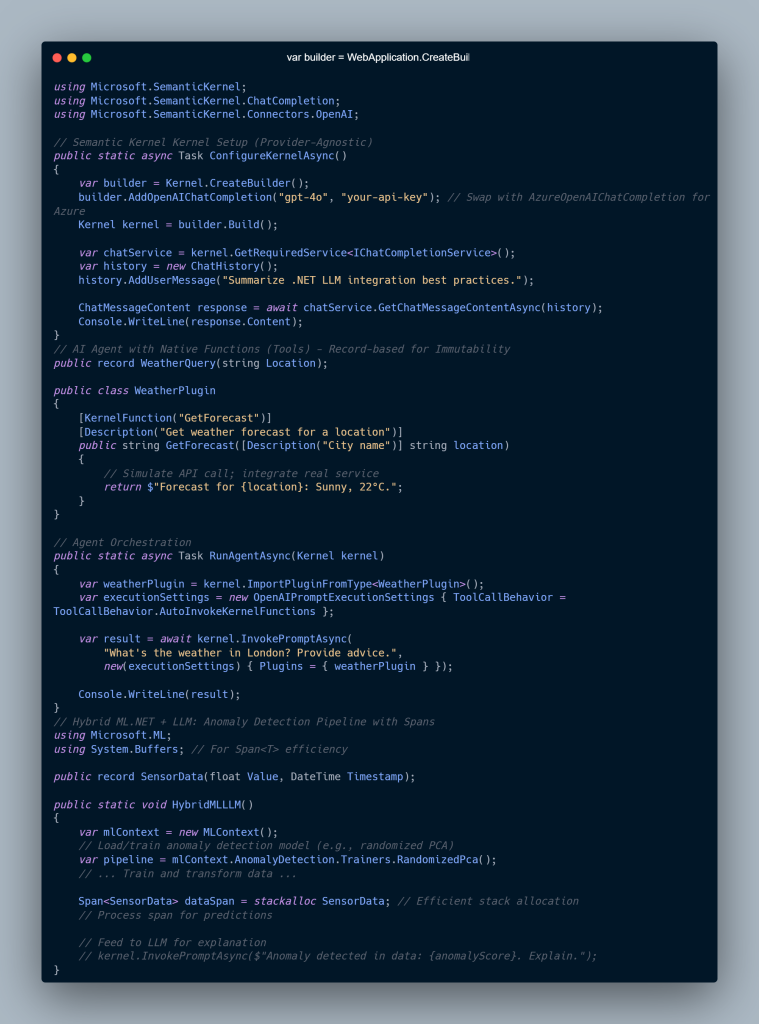

1. Provider-Agnostic Abstraction

Use Semantic Kernel to integrate:

- OpenAI GPT models

- Azure OpenAI models

- Hugging Face

- Google Gemini

Swap providers without rewriting business logic. This portability is a core benefit of LLM integration in .NET.
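Here is a minimal sketch of the idea. It uses a hypothetical IChatProvider interface in place of a real SDK, so it runs without NuGet packages. In Semantic Kernel itself, IChatCompletionService plays this role. The fake providers, TicketSummarizer, and ProviderFactory are illustrative names, not library APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical abstraction standing in for Semantic Kernel's IChatCompletionService.
public interface IChatProvider
{
    string Name { get; }
    Task<string> CompleteAsync(string prompt);
}

// Fake providers; real ones would wrap the Azure OpenAI, OpenAI, Gemini, or Hugging Face clients.
public sealed class FakeAzureOpenAIProvider : IChatProvider
{
    public string Name => "azure-openai";
    public Task<string> CompleteAsync(string prompt) =>
        Task.FromResult($"[azure-openai] summary of: {prompt}");
}

public sealed class FakeGeminiProvider : IChatProvider
{
    public string Name => "gemini";
    public Task<string> CompleteAsync(string prompt) =>
        Task.FromResult($"[gemini] summary of: {prompt}");
}

// Business logic depends only on the interface, never on a concrete provider.
public sealed class TicketSummarizer
{
    private readonly IChatProvider _provider;
    public TicketSummarizer(IChatProvider provider) => _provider = provider;

    public Task<string> SummarizeAsync(string ticketText) =>
        _provider.CompleteAsync($"Summarize this support ticket in one sentence: {ticketText}");
}

// Selects a provider by name, e.g. a value read from configuration.
public static class ProviderFactory
{
    private static readonly Dictionary<string, Func<IChatProvider>> Registry = new()
    {
        ["azure-openai"] = () => new FakeAzureOpenAIProvider(),
        ["gemini"] = () => new FakeGeminiProvider(),
    };

    public static IChatProvider Create(string name) =>
        Registry.TryGetValue(name, out var factory)
            ? factory()
            : throw new ArgumentException($"Unknown provider '{name}'", nameof(name));
}
```

Because TicketSummarizer only sees the interface, moving from Azure OpenAI to Gemini means changing the name passed to ProviderFactory.Create, typically read from configuration. The summarization code stays the same.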

2. Hybrid ML

Combine:

- ML.NET → local models (anomaly detection, recommendation, classification)

- LLMs → reasoning, natural language explanation, summarization

This hybrid approach is one of the defining advantages of building AI systems on .NET. Fast, inexpensive predictions run locally, and the LLM is reserved for language work.
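The division of labor can be sketched as follows. A hand-weighted logistic regression stands in for a trained ML.NET model. The features, the weights, and the LocalFailureModel and ExplanationPrompt names are all hypothetical. The point is that the local model produces the number, and the LLM only turns it into an explanation.

```csharp
using System;
using System.Globalization;

// Output of the local model: a label plus its probability.
public readonly record struct LocalPrediction(string Label, double Probability);

// Stand-in for a trained ML.NET classifier: a hand-weighted logistic regression.
// Features and weights are hypothetical and only illustrate the flow.
public static class LocalFailureModel
{
    private const double Bias = -4.0;
    private const double WeightErrorCount = 0.5;
    private const double WeightHoursSinceService = 0.01;

    public static LocalPrediction Predict(int errorCount, int hoursSinceService)
    {
        double z = Bias + WeightErrorCount * errorCount + WeightHoursSinceService * hoursSinceService;
        double probability = 1.0 / (1.0 + Math.Exp(-z)); // logistic (sigmoid) function
        return new LocalPrediction(probability >= 0.5 ? "failure-risk" : "normal", probability);
    }
}

// The LLM receives the local result and only explains it; it does not recompute the score.
public static class ExplanationPrompt
{
    public static string Build(LocalPrediction prediction, int errorCount, int hoursSinceService)
    {
        string p = prediction.Probability.ToString("F2", CultureInfo.InvariantCulture);
        return $"A local model labeled this machine '{prediction.Label}' with probability {p}. " +
               $"Inputs: {errorCount} errors in the last 24 hours, {hoursSinceService} hours since service. " +
               "Explain the likely cause in two sentences for a maintenance engineer.";
    }
}
```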

3. RAG (Retrieval-Augmented Generation)

Store enterprise data in:

- Azure AI Search (formerly Azure Cognitive Search)

- Pinecone

- Qdrant

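At query time, the application retrieves the most relevant chunks and injects them into the prompt. The LLM then answers from current enterprise data without retraining.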
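Here is a minimal sketch of the retrieval step. It uses an in-memory store in place of Azure AI Search, Pinecone, or Qdrant, and assumes the caller supplies precomputed embeddings. InMemoryVectorStore and RagPrompt are hypothetical names. A real pipeline would call an embedding model and the vector database's own query API.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public sealed record DocumentChunk(string Id, string Text, float[] Embedding);

// In-memory stand-in for a vector database.
public sealed class InMemoryVectorStore
{
    private readonly List<DocumentChunk> _chunks = new();

    public void Upsert(DocumentChunk chunk)
    {
        _chunks.RemoveAll(c => c.Id == chunk.Id);
        _chunks.Add(chunk);
    }

    // Returns the k chunks whose embeddings are most similar (cosine) to the query embedding.
    public IReadOnlyList<DocumentChunk> Search(float[] query, int k) =>
        _chunks.OrderByDescending(c => Cosine(query, c.Embedding)).Take(k).ToList();

    public static double Cosine(ReadOnlySpan<float> a, ReadOnlySpan<float> b)
    {
        if (a.Length != b.Length) throw new ArgumentException("Embedding dimensions differ.");
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        return normA == 0 || normB == 0 ? 0 : dot / (Math.Sqrt(normA) * Math.Sqrt(normB));
    }
}

public static class RagPrompt
{
    // Grounds the model: retrieved text goes into the prompt, and the model is told to stay within it.
    public static string Build(string question, IEnumerable<DocumentChunk> context) =>
        "Answer using only the context below. If the answer is not there, say so.\n\n" +
        string.Join("\n", context.Select(c => $"[{c.Id}] {c.Text}")) +
        $"\n\nQuestion: {question}";
}
```

Ranking by similarity and pasting the top-k chunks into the prompt is the core of RAG. Production stores replace this linear scan with an approximate nearest-neighbor index.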

4. Agentic AI & Tool Use

Semantic Kernel lets LLMs:

- Call APIs

- Execute functions

- Plan multi-step tasks

- Read/write structured memory

This enables autonomous, multi-step task flows rather than just chat responses, which makes tool use a critical pillar of LLM integration in .NET.
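The host side of tool calling can be sketched as a registry that maps tool names to handlers and dispatches the calls a model requests. This is a hypothetical stand-in for what Semantic Kernel does with plugin methods marked [KernelFunction]. ToolCall and ToolRegistry are illustrative names, and the model call itself is omitted.

```csharp
using System;
using System.Collections.Generic;

// A tool call as a model might emit it: a function name plus string arguments.
public sealed record ToolCall(string Name, IReadOnlyDictionary<string, string> Arguments);

// Hypothetical registry of tools the model is allowed to call.
public sealed class ToolRegistry
{
    private readonly Dictionary<string, (string Description, Func<IReadOnlyDictionary<string, string>, string> Handler)> _tools =
        new(StringComparer.OrdinalIgnoreCase);

    public void Register(string name, string description, Func<IReadOnlyDictionary<string, string>, string> handler) =>
        _tools[name] = (description, handler);

    // These descriptions are what the model sees when choosing a tool.
    public IEnumerable<string> Describe()
    {
        foreach (var (name, tool) in _tools)
            yield return $"{name}: {tool.Description}";
    }

    // Validates the requested tool and runs it; the result string is fed back to the model.
    public string Dispatch(ToolCall call)
    {
        if (!_tools.TryGetValue(call.Name, out var tool))
            return $"error: unknown tool '{call.Name}'";
        try
        {
            return tool.Handler(call.Arguments);
        }
        catch (KeyNotFoundException)
        {
            return $"error: missing argument for '{call.Name}'";
        }
    }
}
```

Returning errors as strings rather than throwing lets the model see what went wrong and retry. This is a common way for agent loops to recover from bad calls.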

Implementation — Practical .NET Code

Enterprise Scenario

Imagine a manufacturing plant:

- Edge devices run ML.NET anomaly detection

- Semantic Kernel agents summarize sensor failures

- Azure OpenAI produces reports for engineers

- Kubernetes ensures scaling and uptime

This architecture:

✔ Reduces false positives

✔ Keeps sensitive data in-house

✔ Turns raw sensor alerts into reports engineers can act on
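The edge-side detection step could look roughly like this. A rolling z-score detector stands in for ML.NET's anomaly detection transforms. The class name, window size, and threshold are hypothetical. Only the readings it flags would be forwarded to the Semantic Kernel agent for summarization.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical edge detector: flags readings far from the mean of a rolling window.
public sealed class RollingZScoreDetector
{
    private readonly int _windowSize;
    private readonly double _threshold;
    private readonly Queue<double> _window = new();

    public RollingZScoreDetector(int windowSize, double threshold)
    {
        if (windowSize < 2) throw new ArgumentOutOfRangeException(nameof(windowSize));
        _windowSize = windowSize;
        _threshold = threshold;
    }

    // True when the reading is more than `threshold` sample standard deviations from the window mean.
    public bool IsAnomaly(double reading)
    {
        bool anomaly = false;
        if (_window.Count == _windowSize)
        {
            double mean = 0;
            foreach (var x in _window) mean += x;
            mean /= _windowSize;

            double variance = 0;
            foreach (var x in _window) variance += (x - mean) * (x - mean);
            double std = Math.Sqrt(variance / (_windowSize - 1));

            anomaly = std > 0
                ? Math.Abs(reading - mean) / std > _threshold
                : Math.Abs(reading - mean) > 1e-9; // flat window: any change is unusual
        }

        // Anomalies stay out of the window so a single spike does not inflate the baseline.
        if (!anomaly)
        {
            _window.Enqueue(reading);
            if (_window.Count > _windowSize) _window.Dequeue();
        }
        return anomaly;
    }
}
```

For example, new RollingZScoreDetector(windowSize: 60, threshold: 3.0) would flag readings more than three standard deviations from the last 60 normal samples.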

Performance & Scalability

To optimize .NET LLM workloads:

🔧 Key Techniques

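- Use LLamaSharp (llama.cpp-based, GGUF models) or ONNX Runtime for local inference

- Cache embeddings in Redis

- Scale inference on Azure Kubernetes Service (AKS) with the Horizontal Pod Autoscaler (HPA)

- Reduce allocations with C# spans (Span<T>, Memory<T>) and record structs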

- Use AOT compilation in .NET 8+ to decrease cold-start time
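Here is a minimal sketch of the caching item. An in-process ConcurrentDictionary stands in for Redis, and the embedding model is a caller-supplied delegate. EmbeddingCache and its key scheme (model name plus SHA-256 of the text) are hypothetical. With Redis, the same keys would typically carry a TTL.

```csharp
using System;
using System.Collections.Concurrent;
using System.Security.Cryptography;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical cache in front of an embedding model; ConcurrentDictionary stands in for Redis.
public sealed class EmbeddingCache
{
    private readonly ConcurrentDictionary<string, float[]> _store = new();
    private readonly string _model;
    private readonly Func<string, Task<float[]>> _embed;
    private int _modelCalls;

    public EmbeddingCache(string model, Func<string, Task<float[]>> embed)
    {
        _model = model;
        _embed = embed;
    }

    public int ModelCalls => Volatile.Read(ref _modelCalls);

    // Hashing keeps keys short; including the model name avoids serving vectors from another model.
    public string Key(string text) =>
        $"emb:{_model}:{Convert.ToHexString(SHA256.HashData(Encoding.UTF8.GetBytes(text)))}";

    public async Task<float[]> GetOrCreateAsync(string text)
    {
        string key = Key(text);
        if (_store.TryGetValue(key, out var cached)) return cached;

        // Cache miss: one model call. Concurrent misses on the same text may both call
        // the model; the later write simply overwrites the earlier one.
        Interlocked.Increment(ref _modelCalls);
        float[] vector = await _embed(text);
        _store[key] = vector;
        return vector;
    }
}
```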

📉 Cost Controls

- Push light ML to edge devices

- Use small local models when possible

- Implement request routing logic:
  - Local ML first
  - Cloud LLM when necessary
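The routing rule can be sketched as a local-first router. Both models are delegates here. LocalFirstRouter, the RoutedAnswer record, and the 0.8 confidence threshold are hypothetical choices, not recommended values.

```csharp
using System;
using System.Threading.Tasks;

public sealed record RoutedAnswer(string Text, string Source);

// Hypothetical router: answer locally when confident, escalate to the cloud LLM otherwise.
public sealed class LocalFirstRouter
{
    private readonly Func<string, (string Answer, double Confidence)> _local;
    private readonly Func<string, Task<string>> _cloud;
    private readonly double _minConfidence;

    public LocalFirstRouter(Func<string, (string Answer, double Confidence)> local,
                            Func<string, Task<string>> cloud,
                            double minConfidence = 0.8)
    {
        _local = local;
        _cloud = cloud;
        _minConfidence = minConfidence;
    }

    public async Task<RoutedAnswer> AnswerAsync(string request)
    {
        var (answer, confidence) = _local(request);
        if (confidence >= _minConfidence)
            return new RoutedAnswer(answer, "local");

        // Only low-confidence requests pay for a cloud call.
        string cloudAnswer = await _cloud(request);
        return new RoutedAnswer(cloudAnswer, "cloud");
    }
}
```

The threshold is the main cost lever. Raising it sends more traffic to the cloud LLM. Lowering it keeps more answers local, at some risk to quality.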

Decision Matrix: .NET vs Python for AI

| Category | .NET LLM Integration | Python/LangChain |
|---|---|---|
| Performance | ⭐ High (AOT, ML.NET) | ⭐ Medium (GIL bottlenecks) |
| Cloud Fit | Azure-native integrations | Hugging Face ecosystem |
| Scalability | Built for microservices | Needs orchestration tools |
| Best Use | Enterprise production | Research & rapid prototyping |

Expert Guidance & Pitfalls

Avoid:

❌ Relying wholly on cloud LLMs

❌ Shipping proprietary data to LLMs without controls

❌ Treating an LLM like an oracle

Apply:

✔ RAG for accuracy

✔ LoRA fine-tuning for domain precision

✔ AI agents for orchestration

✔ ML.NET pre-processing before LLM reasoning

✔ Application Insights + Prometheus for telemetry
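One concrete form of "controls" on proprietary data is a filter that masks identifiers before a prompt leaves the network. This sketch is hypothetical. The PromptRedactor name, the e-mail pattern, and the PN-123456 part-number format are assumptions, and regex masking on its own is only one layer of a data-protection control.

```csharp
using System.Text.RegularExpressions;

// Hypothetical pre-send filter: masks identifiers before a prompt is sent to a cloud LLM.
public static class PromptRedactor
{
    private static readonly Regex Email =
        new(@"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}", RegexOptions.Compiled);

    // Hypothetical internal part-number format, e.g. "PN-123456".
    private static readonly Regex PartNumber =
        new(@"\bPN-\d{6}\b", RegexOptions.Compiled);

    public static string Redact(string prompt)
    {
        string result = Email.Replace(prompt, "[EMAIL]");
        return PartNumber.Replace(result, "[PART]");
    }
}
```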

Conclusion

LLM Integration in .NET is no longer experimental—it’s foundational.

With .NET 8+, Semantic Kernel, and ML.NET 4.0, organizations can:

- Build autonomous AI systems

- Run models locally or in the cloud

- Produce enterprise-ready intelligence

- Unlock operational efficiency at scale

The future of .NET is AI-native development—merging predictive analytics, reasoning agents, and real-time data with robust enterprise software pipelines.

FAQs

❓ How do I build RAG with .NET?

Use Semantic Kernel + Pinecone/Azure AI Search + embeddings.

Result: answers grounded in your own data and fewer hallucinations. How much they drop depends on retrieval quality and the domain.

❓ ML.NET or Semantic Kernel?

- ML.NET = classification, forecasting, anomaly detection

- Semantic Kernel = orchestration, planning, tool-calling

Hybrid ≈ best of both.

❓ Best practice for autonomous agents?

Use:

- ReAct prompting

- Native functions

- Volatile (short-term) + long-term memory
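A ReAct loop interleaves model reasoning with tool execution. This sketch assumes a plain-text protocol (Thought, Action: tool[input], Observation, Final Answer). It takes the model as a delegate, so it can run against a scripted fake. ReActLoop and the exact line format are assumptions, not Semantic Kernel APIs.

```csharp
using System;
using System.Collections.Generic;
using System.Text;
using System.Text.RegularExpressions;

// Minimal ReAct-style loop: the model emits Thought/Action lines, the host runs the action
// and appends an Observation, until the model emits "Final Answer:".
public static class ReActLoop
{
    private static readonly Regex ActionLine =
        new(@"^Action:\s*(\w+)\[(.*)\]\s*$", RegexOptions.Multiline);

    public static string Run(string question,
                             Func<string, string> model,
                             IReadOnlyDictionary<string, Func<string, string>> tools,
                             int maxSteps = 5)
    {
        var transcript = new StringBuilder($"Question: {question}\n");
        for (int step = 0; step < maxSteps; step++)
        {
            string output = model(transcript.ToString());
            transcript.Append(output).Append('\n');

            int final = output.IndexOf("Final Answer:", StringComparison.Ordinal);
            if (final >= 0) return output[(final + "Final Answer:".Length)..].Trim();

            // Run the requested tool (if any) and feed its result back as an observation.
            Match m = ActionLine.Match(output);
            string observation = !m.Success
                ? "No valid action found."
                : tools.TryGetValue(m.Groups[1].Value, out var tool)
                    ? tool(m.Groups[2].Value)
                    : $"Unknown tool '{m.Groups[1].Value}'.";
            transcript.Append("Observation: ").Append(observation).Append('\n');
        }
        return "Stopped: step limit reached without a final answer.";
    }
}
```

The maxSteps cap matters in production. Without it, a model that never emits a final answer would loop indefinitely.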

❓ How do I scale inference?

- Quantize models

- Apply AOT

- Use AKS with autoscaling
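Quantization stores weights at lower precision. This sketch shows symmetric per-tensor int8 quantization. Each weight w becomes round(w / s), with s = max|w| / 127, which cuts storage from four bytes to one per weight. Int8Quantizer is a hypothetical name. Real toolchains, such as ONNX Runtime's quantization tools and the GGUF formats LLamaSharp loads, use more refined per-channel or block-wise schemes.

```csharp
using System;

// Symmetric per-tensor int8 quantization: q = round(w / s), s = max|w| / 127.
public static class Int8Quantizer
{
    public static (sbyte[] Values, float Scale) Quantize(ReadOnlySpan<float> weights)
    {
        float maxAbs = 0f;
        foreach (float w in weights) maxAbs = Math.Max(maxAbs, Math.Abs(w));
        float scale = maxAbs == 0f ? 1f : maxAbs / 127f;

        var q = new sbyte[weights.Length];
        for (int i = 0; i < weights.Length; i++)
            q[i] = (sbyte)Math.Clamp(MathF.Round(weights[i] / scale), -127f, 127f);
        return (q, scale);
    }

    // Recovers approximate float weights; per-weight error is at most scale / 2.
    public static float[] Dequantize(ReadOnlySpan<sbyte> values, float scale)
    {
        var w = new float[values.Length];
        for (int i = 0; i < values.Length; i++) w[i] = values[i] * scale;
        return w;
    }
}
```

Because the round-trip error per weight is bounded by s/2, a few outlier weights, which inflate s, cost precision for every other weight in the tensor.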

❓ Local vs cloud inference?

Use LLamaSharp for edge, Azure OpenAI for global scale.


🌍 External Links

Microsoft + Azure Docs

🔗 Microsoft Semantic Kernel Repo

https://github.com/microsoft/semantic-kernel

🔗 Semantic Kernel Documentation

https://learn.microsoft.com/semantic-kernel/

🔗 ML.NET Docs

https://learn.microsoft.com/dotnet/machine-learning/

🔗 Azure OpenAI Service

https://learn.microsoft.com/azure/ai-services/openai/

Vector Databases (RAG-friendly)

🔗 Pinecone RAG Concepts

https://www.pinecone.io/learn/retrieval-augmented-generation/

🔗 Azure AI Search RAG Guide

https://learn.microsoft.com/azure/search/search-generative-ai

Models + Optimization

🔗 ONNX Runtime

https://onnxruntime.ai/

🔗 Hugging Face LoRA / Fine-tuning Guide

https://huggingface.co/docs/peft/index


🔗 LLamaSharp (.NET local inference)

https://github.com/SciSharp/LLamaSharp