.NET 8 and Angular Apps with Keycloak

As of 2026, identity management is a core part of secure system architecture rather than a peripheral feature. For software engineers working in the .NET and Angular ecosystems, the challenge is no longer just “making it work,” but making it scalable, observable, and resilient against modern threats. This guide covers the integration of Keycloak with .NET 8 and Angular, moving beyond basic setup into the architectural details that matter for enterprise-grade security.

The Shift to Externalized Identity

Traditionally, developers managed user tables and password hashing directly within their application databases. However, growing compliance requirements and the complexity of features like Multi-Factor Authentication (MFA) have made in-house identity management a liability. Keycloak, an open-source Identity and Access Management (IAM) solution, has become a widely adopted choice for externalizing these concerns.

By offloading authentication to Keycloak, your .NET 8 services become “stateless” regarding user credentials. They no longer store passwords or handle sensitive login logic. Instead, they trust cryptographically signed JSON Web Tokens (JWTs) issued by Keycloak. This separation of concerns allows your team to focus on business logic while Keycloak manages the heavy lifting of security protocols like OpenID Connect (OIDC) and OAuth 2.0.

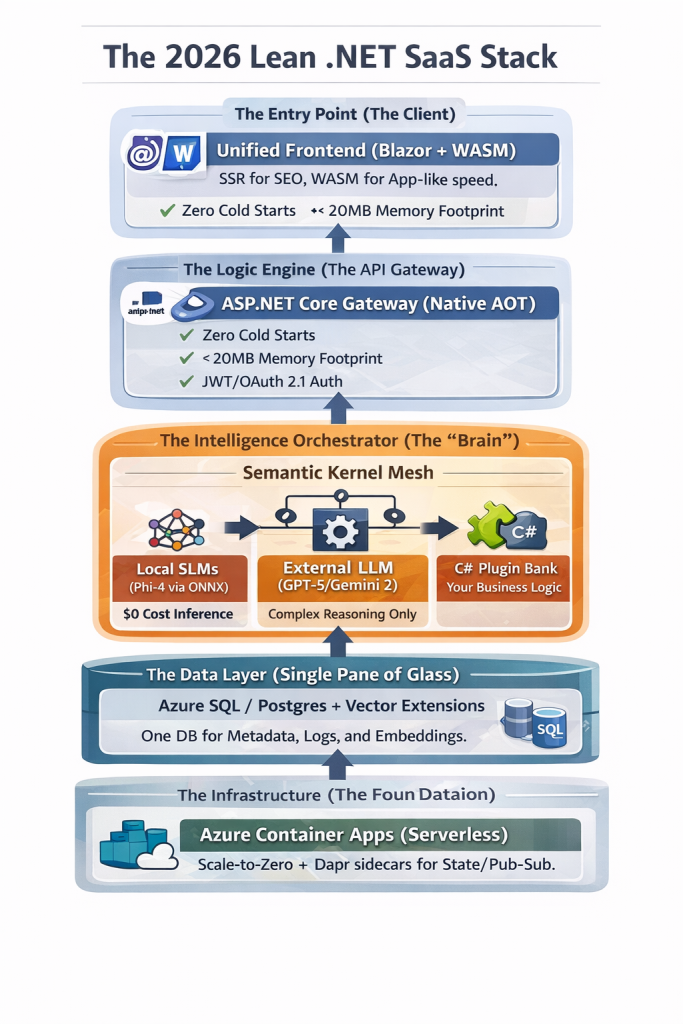

Architectural Patterns for 2026

Engineering the Backend: .NET 8 Implementation

The integration starts at the infrastructure level. In .NET 8, the Microsoft.AspNetCore.Authentication.JwtBearer library remains the primary tool for securing APIs. Modern implementations require a deep integration with Keycloak’s specific features, such as role-based access control (RBAC) and claim mapping.
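As a sketch of what claim mapping has to deal with: Keycloak access tokens usually carry realm roles under `realm_access.roles` and client roles under `resource_access.<client>.roles`, rather than in a single flat claim. The TypeScript below flattens both into one list, which is roughly the transformation a claims mapper on the API side would perform. The `KeycloakClaims` type, the `extractRoles` function, and the `dotnet-api` client ID are illustrative names, not part of any library.

```typescript
// Subset of a decoded Keycloak access token payload (illustrative type).
interface KeycloakClaims {
  realm_access?: { roles?: string[] };
  resource_access?: Record<string, { roles?: string[] }>;
}

// Collect realm roles plus the roles granted for one client, without duplicates.
function extractRoles(claims: KeycloakClaims, clientId: string): string[] {
  const realmRoles = claims.realm_access?.roles ?? [];
  const clientRoles = claims.resource_access?.[clientId]?.roles ?? [];
  return Array.from(new Set([...realmRoles, ...clientRoles]));
}

// Hand-written example payload; "dotnet-api" is a hypothetical client ID.
const sampleClaims: KeycloakClaims = {
  realm_access: { roles: ["user", "admin"] },
  resource_access: { "dotnet-api": { roles: ["orders:read", "admin"] } },
};

console.log(extractRoles(sampleClaims, "dotnet-api"));
```

Deduplicating matters here because the same role name can be granted at both realm and client level.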

Advanced Service Registration

In your Program.cs, the configuration must be precise. You aren’t just checking that a token exists; you are validating its issuer, audience, lifetime, and signature.

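```csharp
using Microsoft.AspNetCore.Authentication.JwtBearer;
using Microsoft.IdentityModel.Tokens;

builder.Services.AddAuthentication(JwtBearerDefaults.AuthenticationScheme)
    .AddJwtBearer(options =>
    {
        // Realm URL of the Keycloak server; used for metadata discovery.
        options.Authority = builder.Configuration["Keycloak:Authority"];
        // Keycloak typically needs an audience mapper before the client ID appears in "aud".
        options.Audience = builder.Configuration["Keycloak:ClientId"];
        // Allow plain-HTTP metadata only during local development.
        options.RequireHttpsMetadata = !builder.Environment.IsDevelopment();
        options.TokenValidationParameters = new TokenValidationParameters
        {
            ValidateIssuer = true,
            ValidIssuer = builder.Configuration["Keycloak:Authority"],
            ValidateAudience = true,
            ValidateLifetime = true,
            ValidateIssuerSigningKey = true
        };
    });
```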

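Because Authority is set, the JwtBearer handler downloads Keycloak’s metadata from the realm’s .well-known/openid-configuration endpoint and then fetches the public signing keys from the jwks_uri listed there. The keys are refreshed automatically, so key rotation in Keycloak requires no manual intervention on the API side.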
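To make the discovery step concrete, here is a minimal TypeScript sketch of the two lookups the handler performs on your behalf: building the metadata URL and picking a signing key by its ID. It assumes the authority is a realm URL of the form `<base>/realms/<realm>`; `discoveryUrl`, `selectSigningKey`, and the sample key IDs are hypothetical names used only for illustration.

```typescript
// Minimal shape of a JSON Web Key as published in a JWKS document.
interface Jwk {
  kid: string;
  kty: string;
  use?: string;
}

// Build the OIDC discovery URL for an authority, tolerating trailing slashes.
function discoveryUrl(authority: string): string {
  return authority.replace(/\/+$/, "") + "/.well-known/openid-configuration";
}

// Pick the signing key whose ID matches the `kid` in the token header.
// Returning undefined signals that the cached key set may be stale and should
// be downloaded again, which is how a rotated key gets picked up.
function selectSigningKey(keys: Jwk[], kid: string): Jwk | undefined {
  return keys.find((k) => k.kid === kid && (k.use ?? "sig") === "sig");
}

// Hand-written sample data; real key IDs are generated by Keycloak.
const cachedKeys: Jwk[] = [
  { kid: "key-2025", kty: "RSA", use: "sig" },
  { kid: "enc-2025", kty: "RSA", use: "enc" },
];

console.log(discoveryUrl("http://localhost:8080/realms/your-realm/"));
console.log(selectSigningKey(cachedKeys, "key-2025")?.kid);
console.log(selectSigningKey(cachedKeys, "key-2026"));
```

A lookup miss on an unknown `kid` is the cue to refetch the key set once before rejecting the token.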

Bridging the Gap: Angular and Keycloak

For an Angular developer, the goal is a seamless User Experience (UX). The Authorization Code Flow with PKCE (Proof Key for Code Exchange) is the recommended way to secure Single Page Applications (SPAs) in 2026. PKCE binds the token request to the client that started the login, so an intercepted authorization code cannot be redeemed by anyone else.
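The PKCE mechanics can be sketched in a few lines. The client creates a random code verifier, sends only its SHA-256 hash (the code challenge) with the authorization request, and later proves possession by sending the verifier with the token request. The example below uses Node's `crypto` module so it runs outside a browser; keycloak-js performs the equivalent steps for you, and the function names here are illustrative assumptions.

```typescript
import { createHash, randomBytes } from "node:crypto";

// Encode bytes as base64url without padding, as required by RFC 7636.
function base64Url(bytes: Buffer): string {
  return bytes
    .toString("base64")
    .replace(/\+/g, "-")
    .replace(/\//g, "_")
    .replace(/=+$/, "");
}

// Random secret kept by the client for the duration of one login attempt.
function createCodeVerifier(): string {
  return base64Url(randomBytes(32)); // 32 random bytes encode to 43 characters
}

// S256 challenge sent with the authorization request.
function createCodeChallenge(verifier: string): string {
  return base64Url(createHash("sha256").update(verifier, "ascii").digest());
}

const verifier = createCodeVerifier();
const challenge = createCodeChallenge(verifier);
console.log({ verifier, challenge });
```

Because only the hash travels through the browser redirect, an attacker who captures the authorization code still lacks the verifier needed to exchange it for tokens.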

Angular Bootstrapping

The keycloak-angular library lets the frontend manage login state efficiently. Initialization should happen at application startup:

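```typescript
import { KeycloakService } from 'keycloak-angular';

// Factory intended for an APP_INITIALIZER provider, so it runs before the app renders.
export function initializeKeycloak(keycloak: KeycloakService) {
  return () =>
    keycloak.init({
      config: {
        url: 'http://localhost:8080', // local development server
        realm: 'your-realm',
        clientId: 'angular-client'
      },
      initOptions: {
        // Restore an existing session silently instead of forcing a login.
        onLoad: 'check-sso',
        silentCheckSsoRedirectUri: window.location.origin + '/assets/silent-check-sso.html',
        // Request PKCE with SHA-256 explicitly.
        pkceMethod: 'S256'
      }
    });
}
```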

When a user is redirected back to the Angular app after a successful login, the application receives an access_token. An Angular HTTP interceptor then adds this token to the Authorization header of requests sent to the .NET backend.
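The core decision an interceptor makes can be sketched without any Angular dependency: attach the bearer token only to requests aimed at your own API, and leave everything else untouched. The `OutgoingRequest` type, the `attachBearerToken` function, and the `https://api.example.com/` base URL are hypothetical stand-ins for illustration.

```typescript
// Plain-object stand-in for an outgoing request (not Angular's HttpRequest).
interface OutgoingRequest {
  url: string;
  headers: Record<string, string>;
}

// Attach the token only to calls aimed at our own API so it never leaks to
// third parties. Keep the trailing slash on apiBaseUrl so that look-alike
// hosts such as "api.example.com.evil.test" do not match the prefix.
function attachBearerToken(
  req: OutgoingRequest,
  token: string | undefined,
  apiBaseUrl: string
): OutgoingRequest {
  if (!token || !req.url.startsWith(apiBaseUrl)) {
    return req;
  }
  // Return a copy instead of mutating the original request.
  return { ...req, headers: { ...req.headers, Authorization: `Bearer ${token}` } };
}

const apiCall = attachBearerToken(
  { url: "https://api.example.com/orders", headers: {} },
  "abc",
  "https://api.example.com/"
);
console.log(apiCall.headers);
```

In an actual Angular interceptor the same check would wrap a call to `req.clone({ setHeaders: { Authorization: ... } })` before passing the request on.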

DIY Tutorial: Implementing Secure Guards

To protect specific routes, such as an admin dashboard, you can extend the KeycloakAuthGuard class from keycloak-angular. The guard checks whether the user is logged in and whether they hold the roles required by the route, as defined in Keycloak.

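```typescript
import { Injectable } from '@angular/core';
import { ActivatedRouteSnapshot, Router, RouterStateSnapshot } from '@angular/router';
import { KeycloakAuthGuard, KeycloakService } from 'keycloak-angular';

@Injectable({ providedIn: 'root' })
export class AuthGuard extends KeycloakAuthGuard {
  constructor(protected override readonly router: Router, protected readonly keycloak: KeycloakService) {
    super(router, keycloak);
  }

  public async isAccessAllowed(route: ActivatedRouteSnapshot, state: RouterStateSnapshot): Promise<boolean> {
    // Send unauthenticated users to the Keycloak login page and deny this navigation.
    if (!this.authenticated) {
      await this.keycloak.login({ redirectUri: window.location.origin + state.url });
      return false;
    }

    // Routes declare their roles via route data, e.g. data: { roles: ['admin'] }.
    const requiredRoles: string[] | undefined = route.data['roles'];
    if (!requiredRoles || requiredRoles.length === 0) return true;

    // Every required role must be present on the user.
    return requiredRoles.every((role) => this.roles.includes(role));
  }
}
```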

Customizing Keycloak: The User Storage SPI

One of the most powerful features for enterprise developers is the User Storage Service Provider Interface (SPI). If you are migrating a legacy system where users are already stored in a custom SQL Server database, you don’t necessarily have to migrate them to Keycloak’s internal database.

By implementing a custom User Storage Provider in Java, you can make Keycloak treat your existing application database as a user source. This lets you use Keycloak’s security features while keeping your original data structure, which is useful when legal or organizational constraints make a migration impractical.

Real-World Implementation: The Reference Repository

To see these concepts in action, the Black-Cockpit/NETCore.Keycloak repository is a useful reference. It demonstrates:

- Automated Token Management: Handling the lifecycle of access and refresh tokens.

- Fine-Grained Authorization: Using Keycloak’s UMA 2.0 to define complex permission structures.

- Clean Architecture Integration: How to cleanly separate security configuration from your domain logic.

Conclusion

Integrating Keycloak with .NET 8 and Angular is not merely a technical task; it is a strategic architectural decision. By adopting OIDC and externalized identity, you ensure that your applications are built on a foundation of “Security by Design”. As we move through 2026, the ability to orchestrate these complex identity flows will remain a hallmark of high-level full-stack engineering.