A Modern, API-First Blueprint for Scalable Enterprise Systems

Executive Summary

Modern software systems are no longer built for a single UI or device. Web apps, mobile apps, IoT devices, partner integrations, and third-party consumers all demand access to the same business capabilities—without being tightly coupled to presentation logic.

This reality has accelerated the adoption of Headless Architecture, an API-first design approach where backend systems are completely decoupled from frontend delivery channels.

In parallel, gRPC has emerged as a high-performance communication protocol for internal microservice interactions. ASP.NET Core ships first-class gRPC support, which makes it a natural fit for .NET-based enterprise platforms.

This article provides a comprehensive, architect-level guide to:

- Headless architecture principles in modern .NET systems
- API-first backend design and frontend decoupling strategies
- Where gRPC fits, and where it doesn’t
- When to choose gRPC vs REST in headless architectures
- Real-world enterprise implementation patterns
- Best practices for scalability, security, and maintainability

This guide is written for senior .NET developers, architects, and technical leads designing systems intended to scale beyond a single UI or platform.

What Is Headless Architecture?

Headless architecture is a system design approach where:

- The backend contains only business logic and APIs
- The frontend is completely decoupled
- No UI assumptions exist inside backend services

In a headless system, the backend becomes a pure capability provider.

Key Characteristics

- API-first design
- Multiple frontend consumers
- Independent deployment cycles
- Technology-agnostic frontends
- Long-term scalability

The backend exposes functionality through APIs, while any number of frontends—React, Angular, mobile apps, kiosks, or third-party systems—consume those APIs independently.
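Here is a minimal sketch of the "pure capability provider" idea, written in Python for brevity rather than C#. The backend function returns plain JSON with no markup or layout hints. Two hypothetical frontends (`render_web` and `render_kiosk`) then decide independently how to present the same payload. The catalog data and every function name are illustrative assumptions.

```python
import html
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Product:
    sku: str
    name: str
    price_cents: int


# Backend: a pure capability provider. It returns data only, with no HTML,
# layout, or device-specific assumptions. The catalog is hypothetical.
_CATALOG = {"SKU-1": Product("SKU-1", "Espresso Machine", 24999)}


def get_product_json(sku: str) -> str:
    """Hypothetical API handler: serializes the capability result as JSON."""
    return json.dumps(asdict(_CATALOG[sku]))


# Frontends: each consumer parses the same payload and owns its presentation.
def render_web(payload: str) -> str:
    p = json.loads(payload)
    return f"<h1>{html.escape(p['name'])}</h1><p>${p['price_cents'] / 100:.2f}</p>"


def render_kiosk(payload: str) -> str:
    p = json.loads(payload)
    return f"{p['name'].upper()} ... {p['price_cents'] / 100:.2f} USD"


if __name__ == "__main__":
    payload = get_product_json("SKU-1")
    print(render_web(payload))
    print(render_kiosk(payload))
```

A third consumer, such as a partner integration, would need only a new client that parses the same JSON. The backend stays untouched.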

Why Headless Architecture Matters in Enterprise .NET Systems

Traditional monolithic .NET applications often mix:

- Business logic
- UI rendering
- State management
- API concerns

This coupling creates friction when:

- Adding new client platforms
- Scaling teams independently
- Integrating with partners
- Modernizing legacy frontends

Headless architecture solves these problems by enforcing clear architectural boundaries.

Enterprise Benefits

- Faster frontend innovation
- Reduced regression risk
- Easier cloud scaling
- Clear ownership between teams
- Better long-term maintainability

API-First Design in .NET: The Foundation of Headless Systems

A headless backend must be API-first, meaning APIs are treated as first-class products—not implementation details.

In .NET, this typically means:

- ASP.NET Core Web APIs
- Strong contract definitions
- Versioned endpoints
- Explicit backward compatibility
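Versioned endpoints and explicit backward compatibility can be illustrated with a small, framework-free sketch in Python. In ASP.NET Core this is typically handled with route templates and a versioning library. The route table, the `/api/v{n}/users/{id}` path shape, and the field names below are hypothetical. The point is that v2 only adds a field, so v1 clients keep working and v1 stays routable.

```python
from typing import Callable, Dict, Tuple

# Hypothetical data standing in for a domain service.
_USERS = {42: {"name": "Ada", "email": "ada@example.com"}}

Handler = Callable[[int], dict]


def get_user_v1(user_id: int) -> dict:
    user = _USERS[user_id]
    return {"id": user_id, "name": user["name"]}


def get_user_v2(user_id: int) -> dict:
    # Additive change only: every v1 field keeps its name and type.
    return {**get_user_v1(user_id), "email": _USERS[user_id]["email"]}


# Both versions stay routable side by side.
ROUTES: Dict[Tuple[str, str], Handler] = {
    ("v1", "users"): get_user_v1,
    ("v2", "users"): get_user_v2,
}


def dispatch(path: str) -> dict:
    """Resolve a path such as '/api/v1/users/42' to its versioned handler."""
    parts = path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "api":
        raise KeyError(f"no route for {path}")
    _, version, resource, raw_id = parts
    return ROUTES[(version, resource)](int(raw_id))


def is_backward_compatible(old: dict, new: dict) -> bool:
    """True if `new` keeps every field of `old` with the same type."""
    return all(key in new and type(new[key]) is type(value) for key, value in old.items())
```

A check like `is_backward_compatible` could run in CI against recorded responses. Renaming or retyping a field would then fail the build instead of breaking a consumer.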

API-First Principles

- Design contracts before implementation
- Treat APIs as public interfaces, even internally
- Never expose UI assumptions
- Optimize for long-term evolution

This is where protocol choice becomes critical.

REST vs gRPC in Headless Architectures

Headless architecture does not mandate a single protocol. Instead, different protocols serve different roles.

REST: External Consumption Layer

REST remains ideal for:

- Browsers
- Mobile apps
- Third-party consumers
- SEO-sensitive web platforms that render pages on the server

Why REST works well externally:

- Human-readable JSON
- Wide tooling support
- Easy debugging
- Cache-friendly
- Works naturally over HTTP
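One concrete reason REST is cache-friendly is that it reuses standard HTTP validators. The server sends an `ETag`, the client echoes it back in `If-None-Match`, and an unchanged resource is answered with `304 Not Modified` and no body. The Python sketch below models that exchange without a web framework. The hashing scheme and the `Cache-Control` value are assumptions, and ASP.NET Core's own caching middleware is not shown.

```python
import hashlib
import json
from typing import Dict, Optional, Tuple


def make_etag(body: bytes) -> str:
    # Strong validator derived from the representation bytes (hash choice is an assumption).
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def etag_matches(if_none_match: Optional[str], etag: str) -> bool:
    """If-None-Match uses weak comparison, so W/"x" also matches "x"."""
    if if_none_match is None:
        return False
    candidates = [t.strip() for t in if_none_match.split(",")]
    return "*" in candidates or etag in candidates or "W/" + etag in candidates


def get_resource(
    resource: dict, if_none_match: Optional[str] = None
) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body) for a GET request."""
    body = json.dumps(resource, sort_keys=True).encode("utf-8")
    etag = make_etag(body)
    headers = {"ETag": etag, "Cache-Control": "private, max-age=60"}
    if etag_matches(if_none_match, etag):
        return 304, headers, b""  # client's cached copy is still current
    headers["Content-Type"] = "application/json"
    return 200, headers, body
```

On a match the network transfer and client-side parsing are skipped. Any change to the resource produces a new ETag and therefore a full `200` response.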

gRPC: Internal Service-to-Service Communication

gRPC is not a replacement for REST in headless architectures—it is a complement.

gRPC excels when:

- Microservices communicate internally
- Low latency is critical
- Strong typing is required
- High throughput is expected

Why Enterprises Use gRPC Internally

- Binary serialization (Protocol Buffers)
- Strict, code-generated contracts
- Excellent performance over multiplexed HTTP/2 connections
- Language-agnostic communication
- Built-in streaming support
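To show what binary serialization means in practice, this Python sketch hand-encodes a message in the Protocol Buffers wire format. Each field is written as a varint tag `(field_number << 3) | wire_type` followed by its value, so field names never travel on the wire. The `Order` message is hypothetical. Real .NET services would generate this code from a `.proto` file (for example through the Grpc.Tools package) rather than writing it by hand. The sketch covers only non-negative varints and UTF-8 strings.

```python
import json


def encode_varint(value: int) -> bytes:
    """Base-128 varint: 7 payload bits per byte, high bit set on every byte except the last."""
    if value < 0:
        raise ValueError("this sketch only encodes non-negative integers")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)
        else:
            out.append(byte)
            return bytes(out)


def tag(field_number: int, wire_type: int) -> bytes:
    return encode_varint((field_number << 3) | wire_type)


def encode_order(order_id: int, customer: str, quantity: int) -> bytes:
    """Encode a hypothetical message:

        message Order { int32 id = 1; string customer = 2; int32 quantity = 3; }

    As in proto3, fields holding their default value (0 or "") are omitted.
    """
    out = bytearray()
    if order_id:
        out += tag(1, 0) + encode_varint(order_id)  # wire type 0: varint
    if customer:
        data = customer.encode("utf-8")
        out += tag(2, 2) + encode_varint(len(data)) + data  # wire type 2: length-delimited
    if quantity:
        out += tag(3, 0) + encode_varint(quantity)
    return bytes(out)


if __name__ == "__main__":
    binary = encode_order(150, "testing", 3)
    text = json.dumps({"id": 150, "customer": "testing", "quantity": 3}, separators=(",", ":"))
    print(binary.hex(), len(binary), len(text.encode("utf-8")))
```

For the sample values, the binary form is 14 bytes against 44 bytes of compact JSON, mostly because numeric tags replace quoted field names. This only works because both sides share the `.proto` contract. The receiver knows that tag 2 means `customer`, which is the "strict contract" side of the trade-off.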

How gRPC Fits into a Headless .NET Architecture

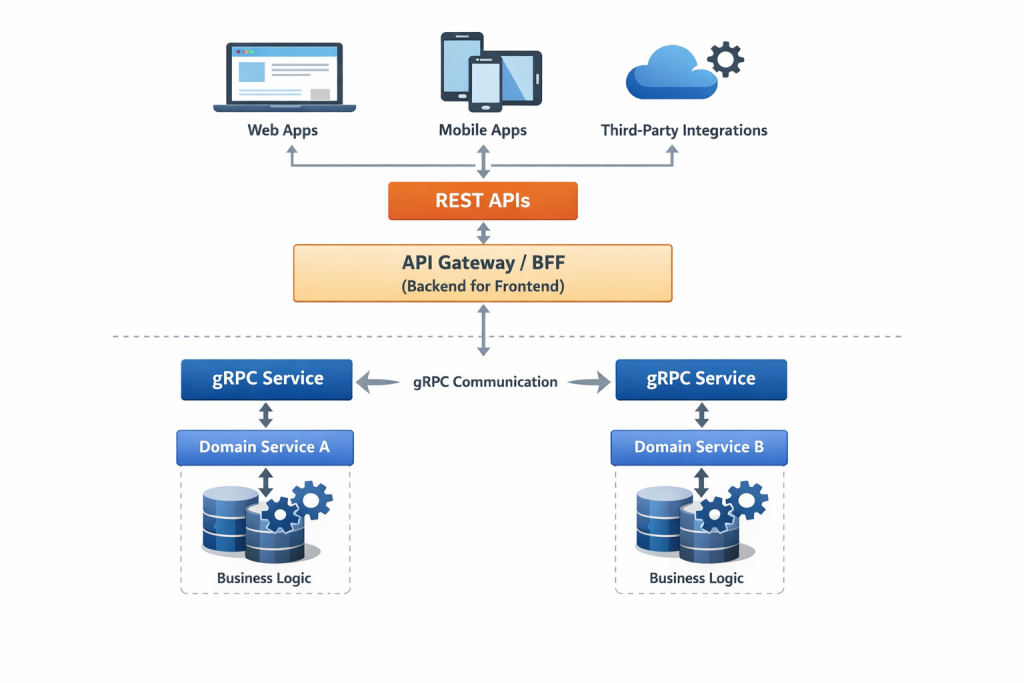

A modern enterprise architecture often looks like this:

Architectural Roles

- REST APIs → Expose headless capabilities to external consumers
- API Gateway / BFF (Backend for Frontend) → Aggregates, secures, and adapts APIs per client type
- gRPC Services → High-performance internal communication between microservices

This separation preserves headless principles without forcing gRPC onto frontends, where it is often impractical: browsers cannot call native gRPC services directly and need gRPC-Web or a translating proxy.
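A gateway or BFF that sits between REST consumers and gRPC services usually has to translate internal failures into HTTP semantics. The Python sketch below uses a hypothetical internal stock service and a hypothetical `RpcError` type standing in for a generated gRPC client. The numeric status codes match the canonical gRPC codes. The HTTP mapping follows the convention commonly used by REST-to-gRPC gateways.

```python
import json
from enum import IntEnum
from typing import Callable, Dict, Tuple


class StatusCode(IntEnum):
    """Subset of the canonical gRPC status codes (numeric values as defined by gRPC)."""

    OK = 0
    INVALID_ARGUMENT = 3
    DEADLINE_EXCEEDED = 4
    NOT_FOUND = 5
    PERMISSION_DENIED = 7
    UNAVAILABLE = 14
    UNAUTHENTICATED = 16


# Conventional gRPC-to-HTTP mapping used by many REST/gRPC gateways.
HTTP_FOR_STATUS: Dict[StatusCode, int] = {
    StatusCode.OK: 200,
    StatusCode.INVALID_ARGUMENT: 400,
    StatusCode.UNAUTHENTICATED: 401,
    StatusCode.PERMISSION_DENIED: 403,
    StatusCode.NOT_FOUND: 404,
    StatusCode.UNAVAILABLE: 503,
    StatusCode.DEADLINE_EXCEEDED: 504,
}


class RpcError(Exception):
    """Hypothetical stand-in for the error a generated gRPC client would raise."""

    def __init__(self, code: StatusCode, detail: str) -> None:
        super().__init__(detail)
        self.code = code
        self.detail = detail


def get_stock_rpc(sku: str) -> int:
    """Hypothetical internal inventory call, stubbed in-process for the sketch."""
    stock = {"SKU-1": 7}
    if not sku:
        raise RpcError(StatusCode.INVALID_ARGUMENT, "sku is required")
    if sku not in stock:
        raise RpcError(StatusCode.NOT_FOUND, f"unknown sku {sku}")
    return stock[sku]


def rest_get_stock(sku: str, rpc: Callable[[str], int] = get_stock_rpc) -> Tuple[int, str]:
    """Edge REST handler: call the internal service, return (HTTP status, JSON body)."""
    try:
        return 200, json.dumps({"sku": sku, "available": rpc(sku)})
    except RpcError as err:
        status = HTTP_FOR_STATUS.get(err.code, 500)  # anything unmapped becomes 500
        return status, json.dumps({"error": err.code.name, "detail": err.detail})
```

With this translation at the edge, internal services can report failures in gRPC terms. Browser and partner clients still see ordinary HTTP status codes and JSON.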

Decoupling Presentation from Business Logic in .NET

True headless architecture requires discipline, not just APIs.

Best Practices

- No UI logic in backend projects
- No frontend-specific DTOs in domain layers
- Domain logic isolated from transport concerns
- Contracts owned and versioned explicitly

In .NET, this often aligns with:

- Clean Architecture
- Hexagonal Architecture
- Vertical Slice Architecture
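The following Python sketch illustrates these practices in a hexagonal (ports and adapters) style:

- The domain `Order` enforces a business rule and knows nothing about HTTP or JSON.
- Persistence sits behind an `OrderRepository` port.
- Only the transport adapter `to_rest_dto` knows the REST field names.

All type and function names are hypothetical. In a .NET solution, each layer might live in its own project.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


# Domain layer: no HTTP, JSON, or gRPC types appear here.
@dataclass
class Order:
    order_id: str
    line_amounts_cents: List[int] = field(default_factory=list)

    def add_line(self, amount_cents: int) -> None:
        if amount_cents <= 0:
            raise ValueError("line amount must be positive")  # business rule
        self.line_amounts_cents.append(amount_cents)

    @property
    def total_cents(self) -> int:
        return sum(self.line_amounts_cents)


class OrderRepository(Protocol):
    """Port: the domain states what it needs, not how orders are stored."""

    def get(self, order_id: str) -> Order: ...

    def save(self, order: Order) -> None: ...


# Infrastructure adapter (in .NET this might wrap EF Core or another data store).
class InMemoryOrderRepository:
    def __init__(self) -> None:
        self._orders: Dict[str, Order] = {}

    def get(self, order_id: str) -> Order:
        return self._orders[order_id]

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order


# Application service: orchestrates the domain through the port only.
def add_order_line(repo: OrderRepository, order_id: str, amount_cents: int) -> Order:
    order = repo.get(order_id)
    order.add_line(amount_cents)
    repo.save(order)
    return order


# Transport adapter: the only code that knows the REST field names.
def to_rest_dto(order: Order) -> str:
    return json.dumps({"orderId": order.order_id, "total": order.total_cents / 100})
```

Swapping REST for gRPC would mean writing another transport adapter. `Order` and `add_order_line` would not change.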

Enterprise Implementation Patterns

Pattern 1: Backend for Frontend (BFF)

Each frontend gets its own tailored backend façade.

Benefits:

- Avoids frontend overfetching
- Simplifies UI logic
- Improves security isolation
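Here is a minimal sketch of the BFF pattern in Python, with three hypothetical internal services stubbed in-process. Each BFF aggregates the same backend calls but returns a payload shaped for its own client. The web BFF includes full review text, while the mobile BFF returns a trimmed payload with one thumbnail. The service names and fields are assumptions for illustration.

```python
from typing import Any, Dict, List


# Hypothetical internal services, stubbed in-process. In production these
# would typically be gRPC clients calling separate microservices.
def catalog_service(sku: str) -> Dict[str, Any]:
    return {
        "sku": sku,
        "name": "Espresso Machine",
        "description": "15-bar pump, steel body",
        "images": ["front.jpg", "side.jpg", "back.jpg"],
        "price_cents": 24999,
    }


def inventory_service(sku: str) -> int:
    return 7


def reviews_service(sku: str) -> List[Dict[str, Any]]:
    return [{"rating": 5, "text": "Great coffee"}, {"rating": 4, "text": "Solid build"}]


def _average_rating(reviews: List[Dict[str, Any]]) -> float:
    ratings = [r["rating"] for r in reviews]
    return round(sum(ratings) / len(ratings), 1) if ratings else 0.0


def web_bff_product(sku: str) -> Dict[str, Any]:
    """Web BFF: a rich product page, so it returns everything the page renders."""
    product = catalog_service(sku)
    reviews = reviews_service(sku)
    return {
        **product,
        "in_stock": inventory_service(sku) > 0,
        "average_rating": _average_rating(reviews),
        "reviews": reviews,
    }


def mobile_bff_product(sku: str) -> Dict[str, Any]:
    """Mobile BFF: a trimmed payload with one thumbnail and no review text."""
    product = catalog_service(sku)
    return {
        "sku": product["sku"],
        "name": product["name"],
        "price_cents": product["price_cents"],
        "thumbnail": product["images"][0],
        "in_stock": inventory_service(sku) > 0,
        "average_rating": _average_rating(reviews_service(sku)),
    }
```

Because each BFF owns its response shape, the mobile team can add or drop fields without negotiating with the web team. Neither client over-fetches data it does not render.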

Pattern 2: API Gateway + gRPC Mesh

- Gateway handles REST, auth, rate limits
- Microservices communicate via gRPC

Benefits:

- High internal performance
- Clean separation of concerns
- Easier scaling under load
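Rate limiting at the gateway is often implemented with a token bucket: each client may burst up to a fixed capacity, and tokens refill at a steady rate. The Python sketch below keeps one bucket per client. It returns the HTTP status the gateway would use, including 429 Too Many Requests when the bucket is empty. The capacity, rate, and choice of client key are assumptions. ASP.NET Core 7.0 and later ship built-in rate-limiting middleware, including a token bucket limiter, which this sketch does not reproduce.

```python
import time
from typing import Callable, Dict


class TokenBucket:
    """Allows bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = capacity
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


class GatewayRateLimiter:
    """Keeps one bucket per client key, such as an API key or client ID (an assumption)."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic) -> None:
        self._capacity = capacity
        self._rate = rate
        self._clock = clock
        self._buckets: Dict[str, TokenBucket] = {}

    def check(self, client_key: str) -> int:
        """Return 200 to forward the request, or 429 (Too Many Requests) to reject it."""
        bucket = self._buckets.get(client_key)
        if bucket is None:
            bucket = TokenBucket(self._capacity, self._rate, self._clock)
            self._buckets[client_key] = bucket
        return 200 if bucket.allow() else 429
```

The injectable `clock` makes the limiter deterministic in tests. With several gateway instances, per-instance buckets only approximate a global limit. Exact limits would need a shared store.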

Pattern 3: Hybrid Headless Platform

- Public REST APIs for partners
- Internal gRPC for core services
- Event-driven messaging for async workflows

This pattern is common in large-scale .NET systems.
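Event-driven messaging decouples the service that raises an event from the services that react to it. The in-memory Python bus below is only a sketch of that shape, using a hypothetical `OrderPlaced` event. Publishing just enqueues the event, and handlers run later when the queue is drained. A production system would use a durable broker (for example Azure Service Bus, RabbitMQ, or Kafka), which adds the persistence, retries, and delivery guarantees this sketch lacks.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Any, Callable, DefaultDict, Deque, List

Handler = Callable[[Any], None]


@dataclass(frozen=True)
class OrderPlaced:
    """Hypothetical integration event raised by an ordering service."""

    order_id: str
    total_cents: int


class InMemoryEventBus:
    """Queue-backed publish/subscribe: publishing never waits for subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[type, List[Handler]] = defaultdict(list)
        self._queue: Deque[Any] = deque()

    def subscribe(self, event_type: type, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event: Any) -> None:
        self._queue.append(event)  # the publisher returns immediately

    def drain(self) -> int:
        """Deliver queued events, as a broker consumer loop would; returns handler invocations."""
        invocations = 0
        while self._queue:
            event = self._queue.popleft()
            for handler in self._subscribers[type(event)]:
                handler(event)
                invocations += 1
        return invocations
```

The ordering service only knows about `OrderPlaced`. New reactions, such as emails, ledger entries, or analytics, can be added as subscribers without changing the publisher.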

Security Considerations in Headless + gRPC Systems

Enterprise headless systems must address:

- Authentication (OAuth2, OpenID Connect)
- Authorization (policy-based access)
- Transport security (TLS everywhere)
- Service identity (mTLS internally)

gRPC works particularly well with mutual TLS for internal service trust.
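Policy-based access means endpoints reference a named policy rather than hard-coding role checks. In ASP.NET Core, policies are registered with `AddAuthorization` and applied with `[Authorize(Policy = "CanRefund")]`. The Python sketch below mirrors only the idea. It assumes token validation (OAuth2 / OpenID Connect) has already happened upstream. The policy names, claim types, and scope values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, Optional


@dataclass
class Principal:
    """Caller identity after token validation, which is assumed to happen upstream."""

    subject: str
    claims: Dict[str, FrozenSet[str]] = field(default_factory=dict)

    def has_claim(self, claim_type: str, value: str) -> bool:
        return value in self.claims.get(claim_type, frozenset())


Policy = Callable[[Principal], bool]

# Hypothetical named policies: endpoints refer to the name, not to role checks.
POLICIES: Dict[str, Policy] = {
    "CanReadOrders": lambda p: p.has_claim("scope", "orders.read"),
    "CanRefund": lambda p: p.has_claim("scope", "orders.write") and p.has_claim("role", "finance"),
}


def authorize(principal: Optional[Principal], policy_name: str) -> int:
    """Return an HTTP status: 401 without identity, 403 if denied, 200 if allowed."""
    if principal is None:
        return 401
    policy = POLICIES.get(policy_name)
    if policy is None:
        return 403  # unknown policy names deny by default
    return 200 if policy(principal) else 403
```

Returning 401 for a missing identity and 403 for an authenticated caller without the required claims keeps the two failure modes distinguishable for clients.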

Common Mistakes Architects Should Avoid

- ❌ Exposing gRPC directly to browsers
- ❌ Mixing UI logic into APIs
- ❌ Treating APIs as internal shortcuts
- ❌ Ignoring versioning strategy
- ❌ Over-engineering protocol usage

Headless architecture is about clarity and separation, not complexity.

When NOT to Use gRPC

Despite its strengths, gRPC is not always appropriate:

- Public APIs consumed by unknown clients
- SEO-dependent web platforms
- Simple CRUD systems
- Teams without Protocol Buffers expertise

Architecture is about fit, not fashion.

Final Thoughts: Designing for the Next 5 Years

Headless architecture paired with gRPC is not a trend—it is a long-term architectural strategy.

For .NET architects, the winning approach is:

- REST for external headless APIs
- gRPC for internal microservice communication
- Strong architectural boundaries
- API-first thinking from day one

This combination delivers scalability, performance, and adaptability—the three qualities enterprise systems must sustain over time.

About the Author

This article is written from an enterprise .NET architecture perspective, informed by real-world experience designing scalable, cloud-native systems using ASP.NET Core, microservices, and distributed architectures.

For a deep dive into running .NET Core microservices on Azure Kubernetes Service (AKS), see our article: https://saas101.tech/net-core-microservices-and-azure-kubernetes-service/
