Building AI-Native .NET Applications with Azure OpenAI, Semantic Kernel, and ML.NET

Executive Summary

Modern organizations are rapidly adopting AI-Native .NET approaches to remain competitive in an AI-accelerated landscape. Traditional .NET applications are no longer enough: teams now need systems that can reason over data, automate decision-making, and learn from patterns. Whether you're building intelligent chatbots, document analysis pipelines, customer-support copilots, or predictive forecasting features, AI-Native .NET development using Azure OpenAI, Semantic Kernel, and ML.NET provides a solid foundation.

This guide addresses a critical challenge: how to architect and implement artificial intelligence in .NET without reinventing the wheel, breaking clean architecture, or introducing untestable components. The real-world problem is clear—developers need a unified approach to orchestrate LLM calls, manage long-term memory and context, handle function calling, and integrate traditional machine-learning models inside scalable systems.

By combining Azure OpenAI for reasoning, Semantic Kernel for orchestration, and ML.NET for structured predictions, you can build production-ready, AI-Native .NET applications with clean architecture, testability, and maintainability. This tutorial synthesizes industry best practices into a step-by-step roadmap you can use immediately.

🛠️ Prerequisites for Building AI-Native .NET Apps

Before getting started, ensure you have the following setup.

Development Environment

- .NET 8.0 SDK or higher
- Visual Studio 2022 or VS Code with C# Dev Kit installed
- Git for version control
- Optional: Docker Desktop for local container testing

Azure Requirements

- Active Azure subscription (free tier works to get started)
- Azure OpenAI resource deployed with a GPT-4, GPT-4o, or newer model
- Optional: Azure AI Search (for RAG/document intelligence scenarios)
- Securely stored credentials:
  - API keys
  - Endpoint URLs
  - Managed Identity if working keyless

Required NuGet Packages:

– `Microsoft.SemanticKernel` (latest stable version)

– `Microsoft.Extensions.DependencyInjection`

– `Microsoft.Extensions.Logging`

– `Microsoft.Extensions.Configuration.UserSecrets`

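– `Microsoft.ML` (ML.NET, for traditional ML integration)

– `Azure.AI.OpenAI` (for direct Azure OpenAI calls)

– Used in later sections: `Azure.Search.Documents` (RAG), `Polly` (retries), `Microsoft.Extensions.Caching.Memory` (caching)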

Knowledge Prerequisites:

– Solid understanding of C# and async/await patterns

– Familiarity with dependency injection and configuration management

– Basic knowledge of REST APIs and authentication

– Understanding of LLM concepts (tokens, temperature, context windows)

Step-by-Step Implementation

Step 1: Project Setup and Configuration

Create a new .NET console application and configure your project structure:

```bash
dotnet new console -n AINativeDotNet
cd AINativeDotNet

dotnet add package Microsoft.SemanticKernel
dotnet add package Microsoft.Extensions.DependencyInjection
dotnet add package Microsoft.Extensions.Logging.Console
dotnet add package Microsoft.Extensions.Configuration.UserSecrets
dotnet add package Azure.AI.OpenAI
dotnet add package Microsoft.ML

dotnet user-secrets init
```

Store your Azure OpenAI credentials securely using user secrets:

```bash
dotnet user-secrets set "AzureOpenAI:Endpoint" "https://your-resource.openai.azure.com/"
dotnet user-secrets set "AzureOpenAI:ApiKey" "your-api-key"
dotnet user-secrets set "AzureOpenAI:DeploymentName" "gpt-4o"
```

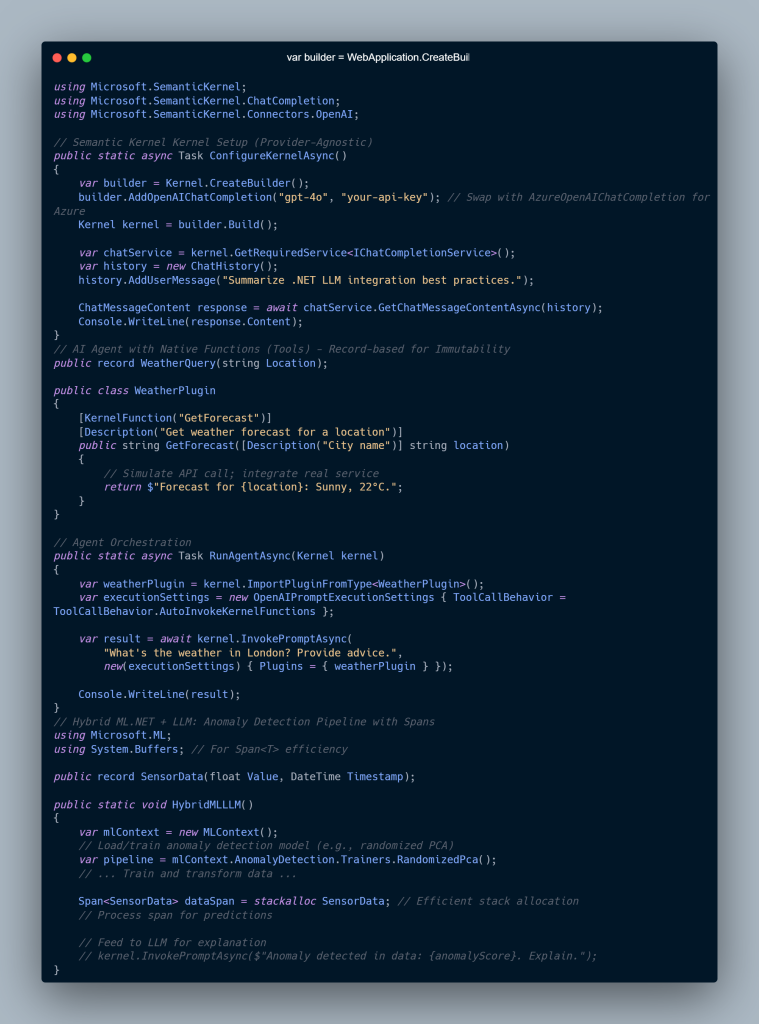

Step 2: Configure the Semantic Kernel

The Kernel is your central orchestrator. Set it up with proper dependency injection:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;

public static class KernelConfiguration
{
    public static IServiceCollection AddAIServices(
        this IServiceCollection services,
        IConfiguration configuration)
    {
        var endpoint = configuration["AzureOpenAI:Endpoint"]
            ?? throw new InvalidOperationException("Missing Azure OpenAI endpoint");
        var apiKey = configuration["AzureOpenAI:ApiKey"]
            ?? throw new InvalidOperationException("Missing Azure OpenAI API key");
        var deploymentName = configuration["AzureOpenAI:DeploymentName"]
            ?? throw new InvalidOperationException("Missing deployment name");

        var builder = Kernel.CreateBuilder()
            .AddAzureOpenAIChatCompletion(deploymentName, endpoint, apiKey);

        // Logging is registered on the kernel builder's own service collection
        builder.Services.AddLogging(logging => logging.AddConsole());

        // Build the kernel once and share it across the application
        services.AddSingleton(builder.Build());
        return services;
    }
}
```

Step 3: Create a Chat Service with Context Management

Build a reusable chat service that manages conversation history and execution settings:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Microsoft.SemanticKernel.Connectors.OpenAI;

public class ChatService(Kernel kernel)
{
    private readonly ChatHistory _chatHistory = new();

    private const string SystemPrompt =
        "You are a helpful AI assistant. Provide clear, concise answers.";

    public async Task<string> SendMessageAsync(string userMessage)
    {
        var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

        // Initialize chat history with system prompt on first message
        if (_chatHistory.Count == 0)
        {
            _chatHistory.AddSystemMessage(SystemPrompt);
        }

        _chatHistory.AddUserMessage(userMessage);

        // Temperature, TopP, and MaxTokens are defined on the OpenAI-specific settings class
        var executionSettings = new OpenAIPromptExecutionSettings
        {
            Temperature = 0.7,
            TopP = 0.9,
            MaxTokens = 2000,
            // Let the model call registered kernel functions (plugins) automatically
            FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
        };

        var response = await chatCompletionService.GetChatMessageContentAsync(
            _chatHistory,
            executionSettings,
            kernel);

        _chatHistory.AddAssistantMessage(response.Content ?? string.Empty);
        return response.Content ?? string.Empty;
    }

    public void ClearHistory() => _chatHistory.Clear();

    // ChatHistory already implements IReadOnlyList<ChatMessageContent>
    public IReadOnlyList<ChatMessageContent> GetHistory() => _chatHistory;
}
```

Step 4: Implement Function Calling (Plugins)

Create native functions that the AI can invoke automatically:

```csharp
using Microsoft.SemanticKernel;
using System.ComponentModel;

public class CalculatorPlugin
{
    [KernelFunction("add")]
    [Description("Adds two numbers together")]
    public static int Add(
        [Description("The first number")] int a,
        [Description("The second number")] int b)
    {
        return a + b;
    }

    [KernelFunction("multiply")]
    [Description("Multiplies two numbers")]
    public static int Multiply(
        [Description("The first number")] int a,
        [Description("The second number")] int b)
    {
        return a * b;
    }
}

public class WeatherPlugin
{
    [KernelFunction("get_weather")]
    [Description("Gets the current weather for a city")]
    public async Task<string> GetWeather(
        [Description("The city name")] string city)
    {
        // In production, call a real weather API
        await Task.Delay(100);
        return $"The weather in {city} is sunny, 72°F";
    }
}
```

Register plugins with the kernel:

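```csharp
// Inside AddAIServices (Step 2): build the kernel, register plugins, then share it
var kernel = builder.Build();
kernel.Plugins.AddFromType<CalculatorPlugin>();
kernel.Plugins.AddFromType<WeatherPlugin>();
services.AddSingleton(kernel);
```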
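Under the hood, automatic function calling is a loop. The model replies with a function name and JSON-encoded arguments, the host invokes the matching method, and the result goes back to the model so it can produce the final answer. Semantic Kernel runs that loop for you once auto-invocation is enabled. The dispatch step on its own can be sketched with plain BCL types. `ToolDispatcher` and its name-to-handler table are hypothetical; this is not how Semantic Kernel is implemented internally.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Text.Json;

// Hypothetical, simplified illustration of tool dispatch; not Semantic Kernel's implementation.
public static class ToolDispatcher
{
    // Maps a function name (as the model would emit it) to a handler over parsed JSON arguments.
    private static readonly Dictionary<string, Func<JsonElement, string>> Tools = new()
    {
        ["add"] = args => (args.GetProperty("a").GetInt32() + args.GetProperty("b").GetInt32())
            .ToString(CultureInfo.InvariantCulture),
        ["multiply"] = args => (args.GetProperty("a").GetInt32() * args.GetProperty("b").GetInt32())
            .ToString(CultureInfo.InvariantCulture),
    };

    // Invokes the named tool with a JSON argument object such as {"a":2,"b":3}.
    public static string Invoke(string functionName, string argumentsJson)
    {
        if (!Tools.TryGetValue(functionName, out var handler))
        {
            // Report the problem as text so the model can see it and try another call.
            return $"Error: unknown function '{functionName}'";
        }

        using var document = JsonDocument.Parse(argumentsJson);
        return handler(document.RootElement);
    }
}
```

Returning an error string for an unknown function, instead of throwing, is one way to let the model recover. Whether that fits depends on your application.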

Step 5: Build a RAG (Retrieval-Augmented Generation) System

For document-aware responses, integrate Azure AI Search (this requires the `Azure.Search.Documents` package):

```csharp
using Azure;
using Azure.Search.Documents;
using Azure.Search.Documents.Models;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public class DocumentRetrievalService(SearchClient searchClient)
{
    public async Task<List<string>> RetrieveRelevantDocumentsAsync(
        string query,
        int topResults = 3)
    {
        var searchOptions = new SearchOptions
        {
            Size = topResults,
            Select = { "content", "source" }
        };

        Response<SearchResults<SearchDocument>> results =
            await searchClient.SearchAsync<SearchDocument>(query, searchOptions);

        var documents = new List<string>();

        // Results are returned ordered by relevance score
        await foreach (var result in results.Value.GetResultsAsync())
        {
            if (result.Document.TryGetValue("content", out var content))
            {
                documents.Add(content?.ToString() ?? string.Empty);
            }
        }

        return documents;
    }
}

public class RAGChatService(
    Kernel kernel,
    DocumentRetrievalService documentService)
{
    private readonly ChatHistory _chatHistory = new();

    public async Task<string> SendMessageWithContextAsync(string userMessage)
    {
        // Retrieve relevant documents
        var documents = await documentService.RetrieveRelevantDocumentsAsync(userMessage);

        // Build context from documents
        var context = string.Join("\n\n", documents);

        var enrichedPrompt = $"""
            Based on the following documents:

            {context}

            Answer this question: {userMessage}
            """;

        _chatHistory.AddUserMessage(enrichedPrompt);

        var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
        var response = await chatCompletionService.GetChatMessageContentAsync(
            _chatHistory,
            kernel: kernel);

        _chatHistory.AddAssistantMessage(response.Content ?? string.Empty);
        return response.Content ?? string.Empty;
    }
}
```
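Joining every retrieved document into the prompt can overflow the context window, and unlabeled text makes it hard for the model to cite its sources. Below is a minimal sketch of a prompt builder that numbers each snippet and stops once a character budget is reached. Characters serve as a rough stand-in for tokens. The `GroundedPromptBuilder` name, the 8,000-character default, and the instruction wording are illustrative assumptions. The sketch also assumes results arrive ranked best-first, which is how Azure AI Search orders them by default (by relevance score).

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper; characters are used as a rough proxy for tokens.
public static class GroundedPromptBuilder
{
    public static string Build(string question, IReadOnlyList<string> documents, int maxContextChars = 8000)
    {
        var context = new StringBuilder();
        for (var i = 0; i < documents.Count; i++)
        {
            // Label each snippet as [1], [2], ... so the answer can reference its sources.
            var snippet = $"[{i + 1}] {documents[i].Trim()}\n\n";
            if (context.Length + snippet.Length > maxContextChars)
            {
                break; // Stop before exceeding the budget; lower-ranked snippets are dropped.
            }
            context.Append(snippet);
        }

        return $"""
            Answer the question using only the sources below. Cite sources by number.
            If the sources do not contain the answer, say that you don't know.

            Sources:
            {context.ToString().TrimEnd()}

            Question: {question}
            """;
    }
}
```

In `RAGChatService`, a call such as `GroundedPromptBuilder.Build(userMessage, documents)` could replace the inline `enrichedPrompt` string.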

Step 6: Integrate ML.NET for Hybrid Intelligence

Combine LLMs with traditional ML for scenarios requiring fast, local inference:

```csharp
using Microsoft.ML;
using Microsoft.ML.Data;
using Microsoft.SemanticKernel;

public class SentimentData
{
    [LoadColumn(0)]
    public string Text { get; set; } = string.Empty;

    [LoadColumn(1)]
    [ColumnName("Label")]
    public bool Sentiment { get; set; }
}

public class SentimentPrediction
{
    [ColumnName("PredictedLabel")]
    public bool Prediction { get; set; }

    public float Probability { get; set; }

    public float Score { get; set; }
}

public class HybridAnalysisService
{
    private readonly Kernel _kernel;
    private readonly PredictionEngine<SentimentData, SentimentPrediction> _predictionEngine;

    // modelPath points to a model trained and saved earlier with mlContext.Model.Save(...).
    // Register with a factory, e.g. services.AddSingleton(sp =>
    //     new HybridAnalysisService(sp.GetRequiredService<Kernel>(), "sentiment-model.zip"))
    public HybridAnalysisService(Kernel kernel, string modelPath)
    {
        _kernel = kernel;
        var mlContext = new MLContext();
        ITransformer model = mlContext.Model.Load(modelPath, out _);

        // PredictionEngine is not thread-safe; for concurrent use prefer PredictionEnginePool (Microsoft.Extensions.ML)
        _predictionEngine = mlContext.Model.CreatePredictionEngine<SentimentData, SentimentPrediction>(model);
    }

    public async Task<AnalysisResult> AnalyzeTextAsync(string text)
    {
        // Step 1: Quick sentiment classification with ML.NET
        var prediction = _predictionEngine.Predict(new SentimentData { Text = text });

        // Probability is P(positive), so confidence in the predicted label is p or 1 - p
        var confidence = prediction.Prediction ? prediction.Probability : 1 - prediction.Probability;

        // Step 2: If the classifier is unsure (neutral or mixed text), use the LLM for deeper analysis
        string? detailedAnalysis = null;
        if (confidence < 0.7f)
        {
            var chatService = new ChatService(_kernel);
            detailedAnalysis = await chatService.SendMessageAsync(
                $"Provide a detailed sentiment analysis of: {text}");
        }

        return new AnalysisResult
        {
            QuickSentiment = prediction.Prediction,
            Confidence = confidence,
            DetailedAnalysis = detailedAnalysis
        };
    }
}

public class AnalysisResult
{
    public bool QuickSentiment { get; set; }
    public float Confidence { get; set; }
    public string? DetailedAnalysis { get; set; }
}
```
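For a calibrated binary classifier, ML.NET's `Probability` column holds the probability of the positive label. A confidently negative text therefore scores near 0 rather than near 1. Measuring confidence as the distance from the decision boundary handles both directions. The rule below isolates that routing decision so it can be unit-tested without loading a model. `SentimentRouter` is a hypothetical name, and the 0.7 default mirrors the threshold used above.

```csharp
using System;

// Hypothetical helper: decides whether a fast ML.NET prediction is trustworthy on its own.
public static class SentimentRouter
{
    // probabilityPositive is P(label == true), as produced by a calibrated binary classifier.
    public static float Confidence(float probabilityPositive) =>
        Math.Max(probabilityPositive, 1f - probabilityPositive);

    // Escalate to the LLM only when the classifier is close to undecided.
    public static bool ShouldEscalate(float probabilityPositive, float threshold = 0.7f) =>
        Confidence(probabilityPositive) < threshold;
}
```

With this rule, a probability of 0.05 (clearly negative) stays on the fast path, while 0.55 (nearly a coin flip) is escalated.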

Step 7: Complete Program.cs with Dependency Injection

Wire everything together:

```csharp
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;

// Load the secrets stored with `dotnet user-secrets` in Step 1
var configuration = new ConfigurationBuilder()
    .AddUserSecrets<Program>()
    .Build();

var services = new ServiceCollection();
services
    .AddAIServices(configuration)
    .AddSingleton<ChatService>()
    .AddLogging(logging => logging.AddConsole());

var serviceProvider = services.BuildServiceProvider();
var chatService = serviceProvider.GetRequiredService<ChatService>();

Console.WriteLine("AI-Native .NET Chat Application");
Console.WriteLine("Type 'exit' to quit\n");

while (true)
{
    Console.Write("You: ");
    var userInput = Console.ReadLine();

    // ReadLine returns null at end of input; exit instead of looping forever
    if (userInput is null || userInput.Equals("exit", StringComparison.OrdinalIgnoreCase))
        break;

    if (string.IsNullOrWhiteSpace(userInput))
        continue;

    try
    {
        var response = await chatService.SendMessageAsync(userInput);
        Console.WriteLine($"Assistant: {response}\n");
    }
    catch (Exception ex)
    {
        Console.WriteLine($"Error: {ex.Message}\n");
    }
}
```

Production-Ready C# Examples

Advanced: Streaming Responses

For better UX, stream responses token-by-token:

```csharp
using System.Runtime.CompilerServices;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public class StreamingChatService(Kernel kernel)
{
    public async IAsyncEnumerable<string> SendMessageStreamAsync(
        string userMessage,
        [EnumeratorCancellation] CancellationToken cancellationToken = default)
    {
        var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
        var chatHistory = new ChatHistory();
        chatHistory.AddUserMessage(userMessage);

        await foreach (var chunk in chatCompletionService.GetStreamingChatMessageContentsAsync(
            chatHistory,
            kernel: kernel,
            cancellationToken: cancellationToken))
        {
            if (!string.IsNullOrEmpty(chunk.Content))
            {
                yield return chunk.Content;
            }
        }
    }
}

// Usage (assumes the service is registered, e.g. services.AddSingleton<StreamingChatService>())
var streamingService = serviceProvider.GetRequiredService<StreamingChatService>();

await foreach (var token in streamingService.SendMessageStreamAsync("Hello"))
{
    Console.Write(token);
}
```
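A streamed reply usually still has to be assembled in full, for example to append it to chat history or write it to a log. The sketch below echoes each chunk as it arrives and returns the complete text. It accepts any `IAsyncEnumerable<string>`, so it works with `SendMessageStreamAsync` as well as with a fake stream in tests. `StreamConsumer` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: echoes streamed chunks to a writer and returns the complete response.
public static class StreamConsumer
{
    public static async Task<string> ConsumeAsync(
        IAsyncEnumerable<string> chunks,
        TextWriter output,
        CancellationToken cancellationToken = default)
    {
        var fullResponse = new StringBuilder();
        await foreach (var chunk in chunks.WithCancellation(cancellationToken))
        {
            output.Write(chunk);        // Show progress to the user immediately.
            fullResponse.Append(chunk); // Keep the whole reply for history or logging.
        }
        output.WriteLine();
        return fullResponse.ToString();
    }
}
```

Usage might look like `var reply = await StreamConsumer.ConsumeAsync(streamingService.SendMessageStreamAsync("Hello"), Console.Out);`.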

Advanced: Error Handling and Retry Logic

Implement resilient patterns for production:

```csharp
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Polly;

public class ResilientChatService(Kernel kernel, ILogger<ResilientChatService> logger)
{
    // Retries failed service calls (HttpOperationException is Semantic Kernel's wrapper),
    // timeouts, and empty replies, waiting 2s, 4s, then 8s (Polly v7-style API)
    private readonly IAsyncPolicy<string> _retryPolicy = Policy
        .Handle<HttpOperationException>()
        .Or<TaskCanceledException>()
        .OrResult<string>(r => string.IsNullOrEmpty(r))
        .WaitAndRetryAsync(
            retryCount: 3,
            sleepDurationProvider: attempt => TimeSpan.FromSeconds(Math.Pow(2, attempt)),
            onRetry: (outcome, timespan, retryCount, context) =>
            {
                logger.LogWarning(
                    "Retry {RetryCount} after {Delay}ms",
                    retryCount,
                    timespan.TotalMilliseconds);
            });

    public async Task<string> SendMessageAsync(string userMessage)
    {
        return await _retryPolicy.ExecuteAsync(async () =>
        {
            var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
            var chatHistory = new ChatHistory();
            chatHistory.AddUserMessage(userMessage);

            var response = await chatCompletionService.GetChatMessageContentAsync(
                chatHistory,
                kernel: kernel);

            // Return empty content instead of throwing so the OrResult condition can retry it
            return response.Content ?? string.Empty;
        });
    }
}
```
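With `Math.Pow(2, attempt)`, the policy above waits 2, 4, and 8 seconds. When many clients are throttled at the same moment, identical delays make them retry in lockstep. Random jitter and an upper cap spread that load out. The sketch below uses the "full jitter" variant, which picks a uniformly random delay up to the exponential ceiling. `BackoffCalculator` and the 30-second cap are illustrative assumptions.

```csharp
using System;

// Hypothetical helper: exponential backoff with full jitter and a maximum delay.
public static class BackoffCalculator
{
    private static readonly TimeSpan BaseDelay = TimeSpan.FromSeconds(2);
    private static readonly TimeSpan MaxDelay = TimeSpan.FromSeconds(30); // assumed cap

    // attempt is 1-based, matching Polly's sleepDurationProvider convention.
    public static TimeSpan Delay(int attempt)
    {
        if (attempt < 1) throw new ArgumentOutOfRangeException(nameof(attempt));

        // Exponential ceiling: 2s, 4s, 8s, ... capped at MaxDelay.
        var ceilingMs = Math.Min(
            MaxDelay.TotalMilliseconds,
            BaseDelay.TotalMilliseconds * Math.Pow(2, attempt - 1));

        // Full jitter: a uniformly random delay between 0 and the ceiling.
        return TimeSpan.FromMilliseconds(Random.Shared.NextDouble() * ceilingMs);
    }
}
```

The signature matches Polly's `sleepDurationProvider`, so it could be passed as `sleepDurationProvider: BackoffCalculator.Delay`.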

Common Pitfalls & Troubleshooting

**Pitfall 1: Token Limit Exceeded**

– **Problem:** Long conversations cause “context window exceeded” errors

– **Solution:** Implement conversation summarization or a sliding-window approach

```csharp
// The Kernel is passed in explicitly; it could also come from the enclosing service
public async Task<string> SummarizeConversationAsync(Kernel kernel, ChatHistory history)
{
    var summaryPrompt = $"""
        Summarize this conversation in 2-3 sentences:

        {string.Join("\n", history.Select(m => $"{m.Role}: {m.Content}"))}
        """;

    var chatService = kernel.GetRequiredService<IChatCompletionService>();
    var summaryRequest = new ChatHistory();
    summaryRequest.AddUserMessage(summaryPrompt);

    var result = await chatService.GetChatMessageContentAsync(
        summaryRequest,
        kernel: kernel);

    return result.Content ?? string.Empty;
}
```
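The sliding-window alternative avoids an extra model call. It keeps the system prompt plus the newest messages that fit a token budget and drops the oldest ones. Below is a minimal sketch. It uses a hypothetical `Message` record in place of Semantic Kernel's `ChatHistory`, and the common rule of thumb of roughly four characters per token for English text. That estimate is an approximation; use a real tokenizer when exact counts matter.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical message type standing in for ChatMessageContent.
public sealed record Message(string Role, string Content);

public static class ConversationWindow
{
    // Rough heuristic: about 4 characters per token, rounded up.
    public static int EstimateTokens(string text) => (text.Length + 3) / 4;

    // Keeps system messages, then adds the newest messages until the budget is used up.
    public static List<Message> Trim(IReadOnlyList<Message> history, int maxTokens)
    {
        var system = history.Where(m => m.Role == "system").ToList();
        var budget = maxTokens - system.Sum(m => EstimateTokens(m.Content));

        var recent = new List<Message>();
        for (var i = history.Count - 1; i >= 0; i--)
        {
            var message = history[i];
            if (message.Role == "system") continue;

            var cost = EstimateTokens(message.Content);
            if (cost > budget) break; // Everything older than this message is dropped.
            budget -= cost;
            recent.Add(message);
        }

        recent.Reverse(); // Restore chronological order.
        return system.Concat(recent).ToList();
    }
}
```

The two techniques combine well: summarize the messages that fall out of the window, then keep that summary as an extra system message.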

**Pitfall 2: Credentials Exposed in Code**

– **Problem:** Hardcoding API keys in source code

– **Solution:** Use user secrets during development and Azure Key Vault (or keyless Managed Identity) in production

```csharp
// ❌ WRONG
var apiKey = "sk-abc123...";

// ✅ CORRECT
var apiKey = configuration["AzureOpenAI:ApiKey"]
    ?? throw new InvalidOperationException("API key not configured");
```

**Pitfall 3: Unhandled Async Deadlocks**

– **Problem:** Blocking on async calls with `.Result` or `.Wait()`

– **Solution:** Always use `await` in async contexts

```csharp
// ❌ WRONG
var response = chatService.SendMessageAsync(message).Result;

// ✅ CORRECT
var response = await chatService.SendMessageAsync(message);
```

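**Pitfall 4: Re-creating Kernel Instances Repeatedly**

– **Problem:** Building a new Kernel, with its service registrations and connectors, for every request repeats setup work and allocations

– **Solution:** Build it once at startup and register it as a singleton in the DI container

```csharp
// ✅ CORRECT
services.AddSingleton(kernel);
```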

## Performance & Scalability Considerations

### Caching Responses

Implement caching for frequently asked questions:

```csharp
using Microsoft.Extensions.Caching.Memory;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public class CachedChatService(
    Kernel kernel,
    IMemoryCache cache)
{
    private const string CacheKeyPrefix = "chat_response_";
    private const int CacheDurationMinutes = 60;

    public async Task<string> SendMessageAsync(string userMessage)
    {
        // Key on the full message: GetHashCode() values can collide and return another question's answer
        var cacheKey = $"{CacheKeyPrefix}{userMessage}";

        if (cache.TryGetValue(cacheKey, out string? cachedResponse) && cachedResponse is not null)
        {
            return cachedResponse;
        }

        var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
        var chatHistory = new ChatHistory();
        chatHistory.AddUserMessage(userMessage);

        var response = await chatCompletionService.GetChatMessageContentAsync(
            chatHistory,
            kernel: kernel);

        var content = response.Content ?? string.Empty;

        // Don't cache empty replies, so a transient failure isn't served for an hour
        if (content.Length > 0)
        {
            cache.Set(cacheKey, content, TimeSpan.FromMinutes(CacheDurationMinutes));
        }

        return content;
    }
}
```
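An exact-match cache only pays off when equivalent questions map to the same key. One option, sketched below, normalizes whitespace and casing and then hashes the result with SHA-256. `string.GetHashCode()` is randomized per process in .NET Core and later; the SHA-256 hash, by contrast, is identical on every machine, which matters if you later move to a distributed cache. `ChatCacheKey` and its normalization rules are illustrative assumptions. Lowercasing, for instance, may merge prompts you consider distinct.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;
using System.Text.RegularExpressions;

// Hypothetical helper for building stable cache keys from user prompts.
public static class ChatCacheKey
{
    public static string Create(string userMessage, string prefix = "chat_response_")
    {
        // Normalize: trim, collapse runs of whitespace, and lowercase with the invariant culture.
        var normalized = Regex.Replace(userMessage.Trim(), @"\s+", " ").ToLowerInvariant();

        // SHA-256 yields a fixed-length key (64 hex characters) that is the same in every process.
        var hash = SHA256.HashData(Encoding.UTF8.GetBytes(normalized));
        return prefix + Convert.ToHexString(hash);
    }
}
```

If answers also depend on the system prompt or the model deployment, include those in the hashed string. That way a configuration change does not serve stale answers.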

### Batch Processing for High Volume

Process multiple requests efficiently:

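```csharp
using Microsoft.SemanticKernel;

public class BatchChatService(Kernel kernel)
{
    public async Task<List<string>> ProcessBatchAsync(
        List<string> messages,
        int maxConcurrency = 5)
    {
        // Limits how many requests hit the model at the same time
        using var semaphore = new SemaphoreSlim(maxConcurrency);

        var tasks = messages.Select(async message =>
        {
            await semaphore.WaitAsync();
            try
            {
                // A fresh ChatService per message keeps each conversation independent
                var chatService = new ChatService(kernel);
                return await chatService.SendMessageAsync(message);
            }
            finally
            {
                semaphore.Release();
            }
        });

        // Task.WhenAll returns results in the same order as the input messages
        return (await Task.WhenAll(tasks)).ToList();
    }
}
```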
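On .NET 6 and later, `Parallel.ForEachAsync` expresses the same bounded concurrency without a hand-managed semaphore. In this sketch, each result is written to the slot matching its input index, so output order follows input order. `BatchRunner` is a hypothetical name. `processAsync` stands in for a call such as `ChatService.SendMessageAsync`.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical helper: runs an async operation over many inputs with a concurrency limit.
public static class BatchRunner
{
    public static async Task<string[]> ProcessAsync(
        IReadOnlyList<string> messages,
        Func<string, CancellationToken, ValueTask<string>> processAsync,
        int maxConcurrency = 5,
        CancellationToken cancellationToken = default)
    {
        var results = new string[messages.Count];
        var options = new ParallelOptions
        {
            MaxDegreeOfParallelism = maxConcurrency,
            CancellationToken = cancellationToken,
        };

        // Iterate over indices so each result lands in the slot matching its input.
        await Parallel.ForEachAsync(Enumerable.Range(0, messages.Count), options, async (i, ct) =>
        {
            results[i] = await processAsync(messages[i], ct);
        });

        return results;
    }
}
```

For example: `await BatchRunner.ProcessAsync(messages, async (m, ct) => await new ChatService(kernel).SendMessageAsync(m));`.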

### Monitoring and Observability

Add structured logging for production diagnostics:

```csharp
using Microsoft.Extensions.Logging;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;

public class ObservableChatService(
    Kernel kernel,
    ILogger<ObservableChatService> logger)
{
    public async Task<string> SendMessageAsync(string userMessage)
    {
        var stopwatch = System.Diagnostics.Stopwatch.StartNew();

        try
        {
            // Log the size of the input rather than its content
            logger.LogInformation(
                "Processing message: {MessageLength} characters",
                userMessage.Length);

            var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();
            var chatHistory = new ChatHistory();
            chatHistory.AddUserMessage(userMessage);

            var response = await chatCompletionService.GetChatMessageContentAsync(
                chatHistory,
                kernel: kernel);

            stopwatch.Stop();
            logger.LogInformation(
                "Message processed in {ElapsedMilliseconds}ms",
                stopwatch.ElapsedMilliseconds);

            return response.Content ?? string.Empty;
        }
        catch (Exception ex)
        {
            stopwatch.Stop();
            logger.LogError(
                ex,
                "Error processing message after {ElapsedMilliseconds}ms",
                stopwatch.ElapsedMilliseconds);
            throw;
        }
    }
}
```
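The service above stops the stopwatch and logs in two separate places. Moving the measurement into a `try`/`finally` wrapper reports latency exactly once on every path, including failures. Below is a sketch with a hypothetical `Timed` helper. The `onCompleted` callback is where you would call `ILogger` or a metrics API.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

// Hypothetical helper: measures an async operation and reports the outcome exactly once.
public static class Timed
{
    public static async Task<T> RunAsync<T>(
        Func<Task<T>> operation,
        Action<TimeSpan, bool> onCompleted) // receives (elapsed, succeeded)
    {
        var stopwatch = Stopwatch.StartNew();
        var succeeded = false;
        try
        {
            var result = await operation();
            succeeded = true;
            return result;
        }
        finally
        {
            // Runs for both success and failure; exceptions still propagate to the caller.
            stopwatch.Stop();
            onCompleted(stopwatch.Elapsed, succeeded);
        }
    }
}
```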

Practical Best Practices

**1. Separate Concerns with Interfaces**

```csharp
public interface IChatService
{
    Task<string> SendMessageAsync(string message);
    void ClearHistory();
}

public class ChatService(Kernel kernel) : IChatService
{
    // Implementation as in Step 3
}

// Register (scoped: one conversation history per scope)
services.AddScoped<IChatService, ChatService>();
```

**2. Use Configuration Objects for Settings**

```csharp
public class AzureOpenAIOptions
{
    public string Endpoint { get; set; } = string.Empty;
    public string ApiKey { get; set; } = string.Empty;
    public string DeploymentName { get; set; } = string.Empty;
    public double Temperature { get; set; } = 0.7;
    public int MaxTokens { get; set; } = 2000;
}
```

In `appsettings.json`, keep `ApiKey` out of the file and supply it from user secrets or Key Vault, as described in Pitfall 2:

```json
{
  "AzureOpenAI": {
    "Endpoint": "https://...",
    "DeploymentName": "gpt-4o",
    "Temperature": 0.7,
    "MaxTokens": 2000
  }
}
```

Register with the options pattern:

```csharp
services.Configure<AzureOpenAIOptions>(configuration.GetSection("AzureOpenAI"));
```
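Misconfigured settings are easier to diagnose at startup than on the first request. With DI this is typically done with `services.AddOptions<AzureOpenAIOptions>().Bind(...).ValidateDataAnnotations().ValidateOnStart()`, where `ValidateDataAnnotations` comes from the `Microsoft.Extensions.Options.DataAnnotations` package. The attributes themselves live in `System.ComponentModel.DataAnnotations`, so the rules can be sketched and tested with the BCL alone. In the sketch below, the options class is the same one as above, annotated, with `ApiKey` omitted for brevity. The `[Range]` limits are illustrative assumptions; check them against your model's actual limits. `OptionsValidation` is a hypothetical helper.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel.DataAnnotations;

// The options class from above, annotated with validation rules (limits are illustrative).
public class AzureOpenAIOptions
{
    [Required, Url]
    public string Endpoint { get; set; } = string.Empty;

    [Required]
    public string DeploymentName { get; set; } = string.Empty;

    [Range(0.0, 2.0)]
    public double Temperature { get; set; } = 0.7;

    [Range(1, 16384)]
    public int MaxTokens { get; set; } = 2000;
}

public static class OptionsValidation
{
    // Returns validation error messages; an empty list means the options are valid.
    public static List<string> Validate(AzureOpenAIOptions options)
    {
        var results = new List<ValidationResult>();
        // validateAllProperties: true is needed for [Range] and [Url], not just [Required].
        Validator.TryValidateObject(options, new ValidationContext(options), results, validateAllProperties: true);
        return results.ConvertAll(r => r.ErrorMessage ?? "Unknown validation error");
    }
}
```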

**3. Implement Unit Testing**

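```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.ChatCompletion;
using Moq;
using Xunit;

public class ChatServiceTests
{
    [Fact]
    public async Task SendMessageAsync_WithValidInput_ReturnsNonEmptyResponse()
    {
        // Arrange
        // Kernel is sealed and cannot be mocked, so mock IChatCompletionService instead.
        // GetChatMessageContentAsync is an extension method that calls the interface method
        // GetChatMessageContentsAsync, which is what Moq can intercept.
        var mockChatCompletion = new Mock<IChatCompletionService>();
        mockChatCompletion
            .Setup(x => x.GetChatMessageContentsAsync(
                It.IsAny<ChatHistory>(),
                It.IsAny<PromptExecutionSettings>(),
                It.IsAny<Kernel>(),
                It.IsAny<CancellationToken>()))
            .ReturnsAsync(new List<ChatMessageContent>
            {
                new(AuthorRole.Assistant, "Test response")
            });

        // Register the mock in a real Kernel
        var builder = Kernel.CreateBuilder();
        builder.Services.AddSingleton<IChatCompletionService>(mockChatCompletion.Object);
        var kernel = builder.Build();

        var service = new ChatService(kernel);

        // Act
        var result = await service.SendMessageAsync("Hello");

        // Assert
        Assert.NotEmpty(result);
        Assert.Equal("Test response", result);
    }
}
```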

**4. Document Your Plugins**

```csharp
using System.ComponentModel;
using Microsoft.SemanticKernel;

/// <summary>
/// Provides mathematical operations for AI function calling.
/// </summary>
public class CalculatorPlugin
{
    /// <summary>
    /// Adds two numbers and returns the sum.
    /// </summary>
    /// <param name="a">The first operand</param>
    /// <param name="b">The second operand</param>
    /// <returns>The sum of a and b</returns>
    [KernelFunction("add")]
    [Description("Adds two numbers together")]
    public static int Add(
        [Description("The first number")] int a,
        [Description("The second number")] int b)
    {
        return a + b;
    }
}
```

Conclusion

You now have a comprehensive foundation for building AI-native .NET applications. The architecture you’ve learned—combining Semantic Kernel for orchestration, Azure OpenAI for intelligence, and ML.NET for specialized tasks—provides flexibility, maintainability, and production-readiness.

**Next Steps:**

1. **Deploy to Azure:** Use Azure Container Instances or App Service to host your application

2. **Add Monitoring:** Integrate Application Insights for production observability

3. **Implement Advanced Patterns:** Explore agent frameworks and multi-turn planning

4. **Optimize Costs:** Monitor token usage and implement caching strategies

5. **Scale Horizontally:** Design for distributed processing with Azure Service Bus or Azure Queue Storage

The AI landscape evolves rapidly. Stay current by following Microsoft’s Semantic Kernel repository, monitoring Azure OpenAI updates, and experimenting with new model capabilities as they become available.

You might also be interested in:

AI-Augmented .NET Backends: Building Intelligent, Agentic APIs with ASP.NET Core and Azure OpenAI

Headless Architecture in .NET Microservices with gRPC

🔗 External Resources

Azure OpenAI Service

https://learn.microsoft.com/azure/ai-services/openai/

Semantic Kernel GitHub

https://github.com/microsoft/semantic-kernel

ML.NET Official Docs

https://learn.microsoft.com/dotnet/machine-learning/